Core Concepts Of Recurrent Neural Networks Training Ppt

These slides give an introduction to Recurrent Neural Networks. A Recurrent Neural Network (RNN) is a subtype of Artificial Neural Network (ANN) that works with time series or other sequential data. This set of PPT slides also discusses the importance of RNNs, their types, and three variants (Bidirectional Recurrent Neural Networks, Long Short-Term Memory, and Gated Recurrent Units), along with their advantages and disadvantages.

These slides give an introduction to Recurrent Neural Networks. A Recurrent Neural Network RNN is a subtype of Artificial N..

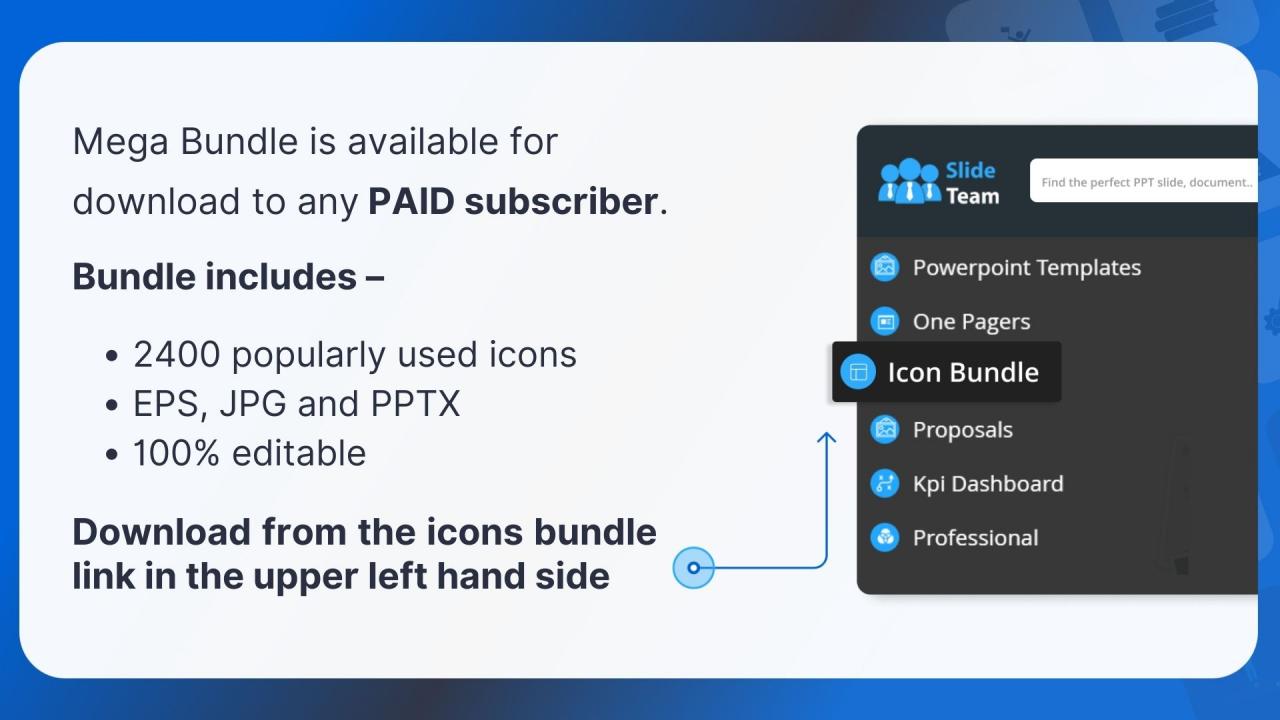

- Google Slides is a new FREE Presentation software from Google.

- All our content is 100% compatible with Google Slides.

- Just download our designs, and upload them to Google Slides and they will work automatically.

- Amaze your audience with SlideTeam and Google Slides.

-

Want Changes to This PPT Slide? Check out our Presentation Design Services

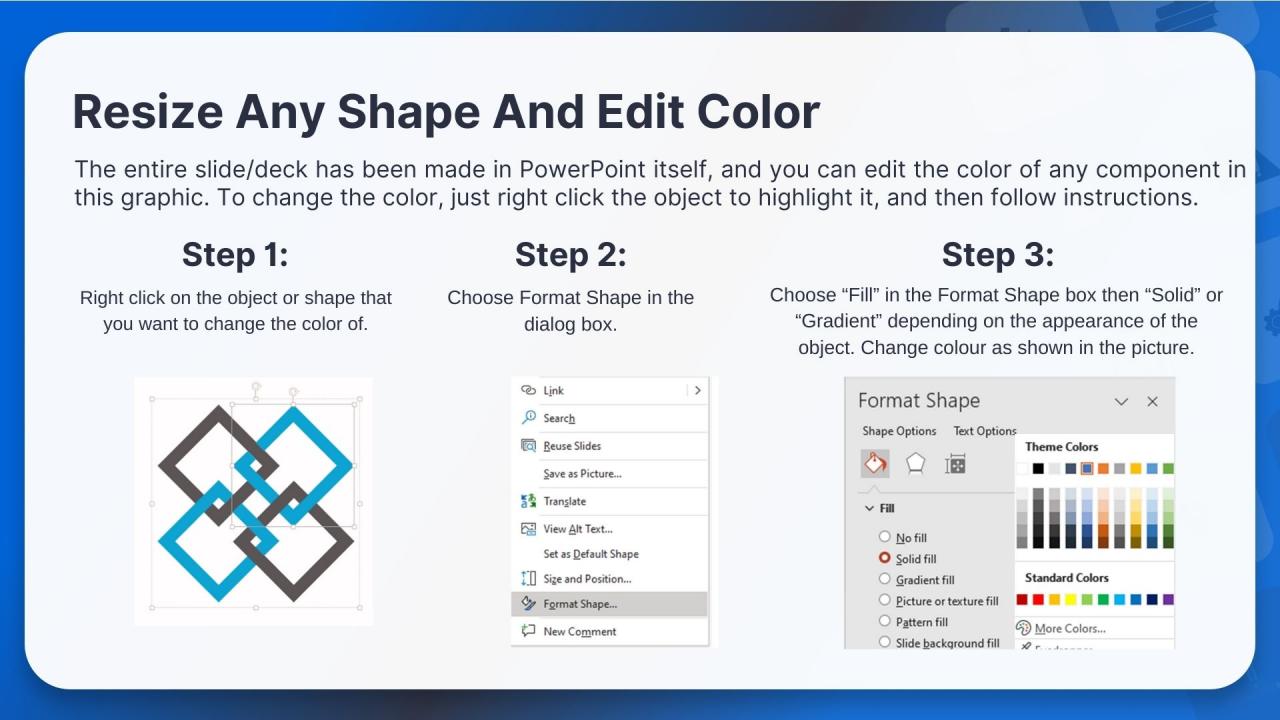

- WideScreen Aspect ratio is becoming a very popular format. When you download this product, the downloaded ZIP will contain this product in both standard and widescreen format.

-

- Some older products that we have may only be in standard format, but they can easily be converted to widescreen.

- To do this, please open the SlideTeam product in Powerpoint, and go to

- Design ( On the top bar) -> Page Setup -> and select "On-screen Show (16:9)” in the drop down for "Slides Sized for".

- The slide or theme will change to widescreen, and all graphics will adjust automatically. You can similarly convert our content to any other desired screen aspect ratio.

Compatible With Google Slides

Get This In WideScreen

You must be logged in to download this presentation.

PowerPoint presentation slides

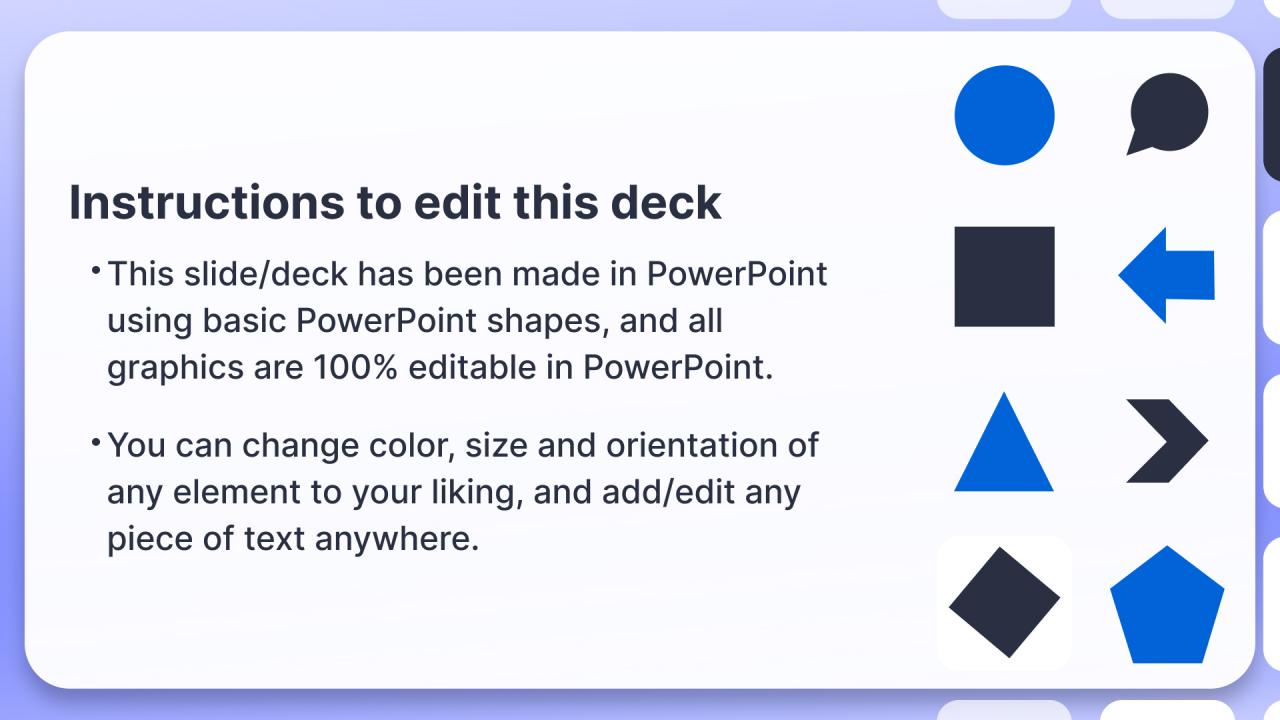

Presenting Core Concepts of Recurrent Neural Networks. These slides are 100 percent made in PowerPoint and are compatible with all screen types and monitors. They also support Google Slides. Premium Customer Support available. Suitable for use by managers, employees, and organizations. These slides are easily customizable. You can edit the color, text, icon, and font size to suit your requirements.


Content of this PowerPoint Presentation

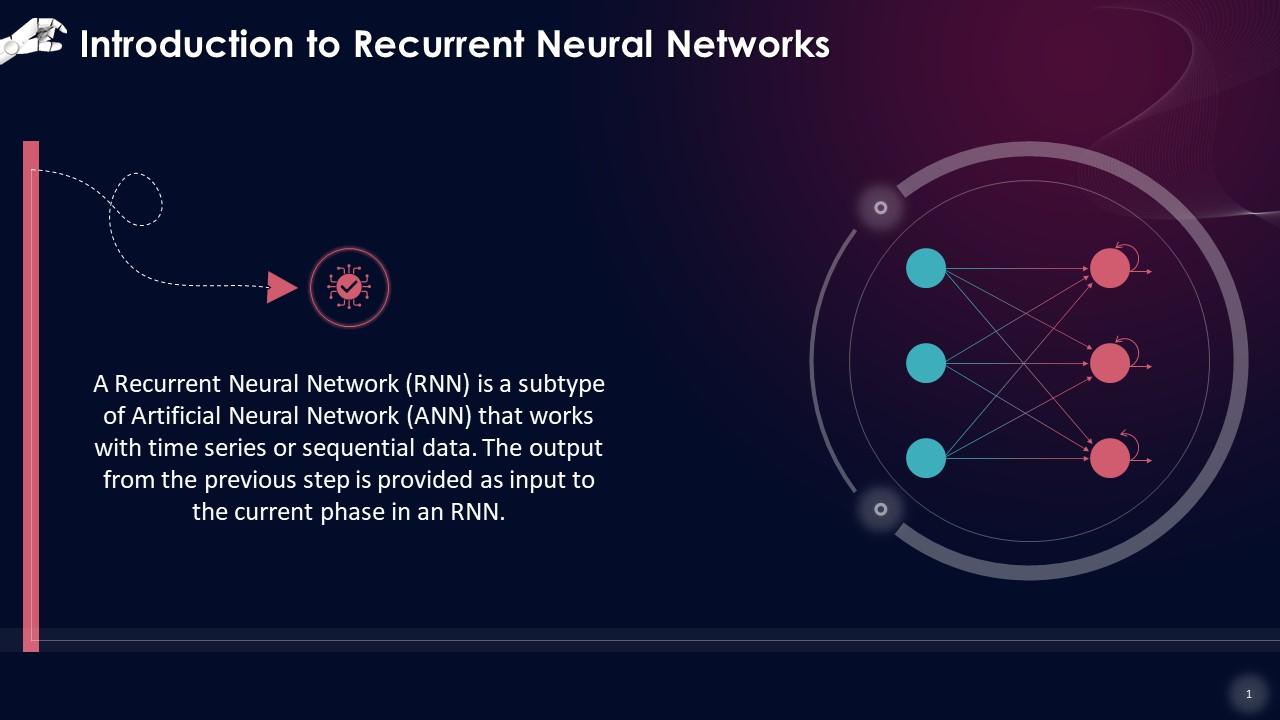

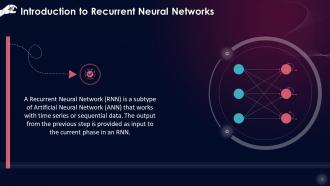

Slide 1

This slide gives an introduction to Recurrent Neural Networks. A Recurrent Neural Network (RNN) is a subtype of Artificial Neural Network (ANN) that works with time series or other sequential data. In an RNN, the output from the previous time step is fed back as an input to the current time step.

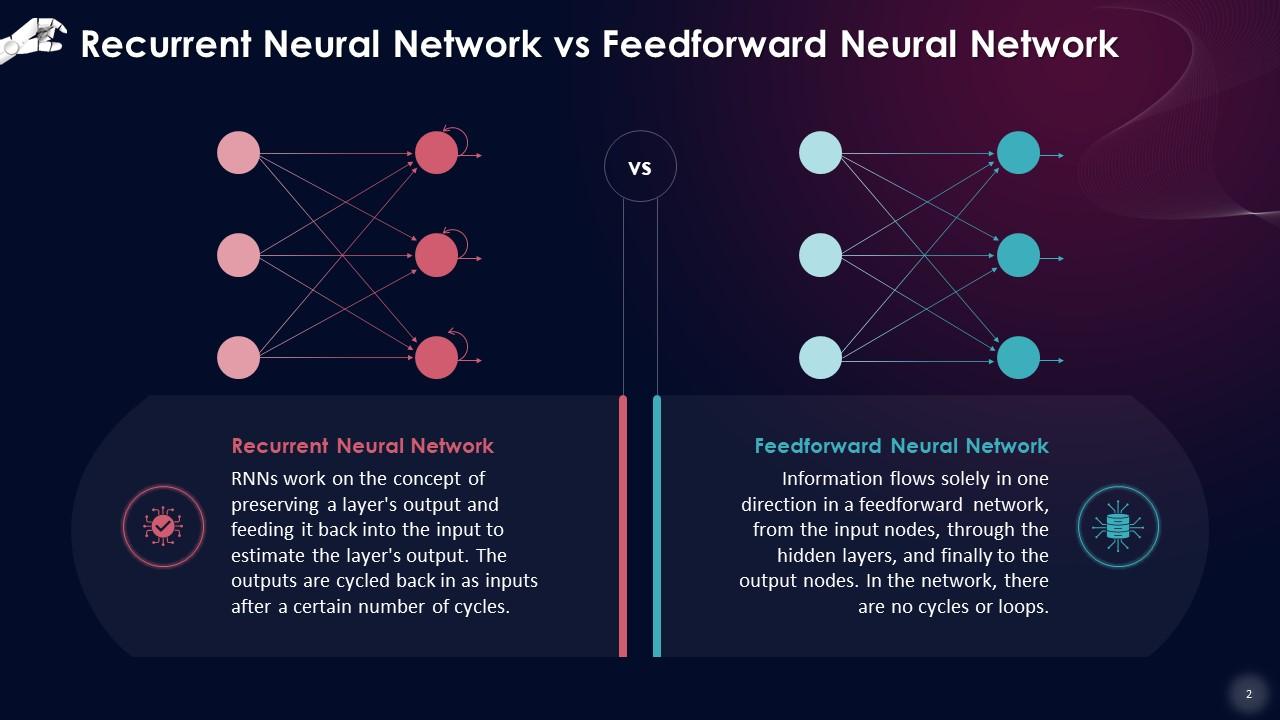

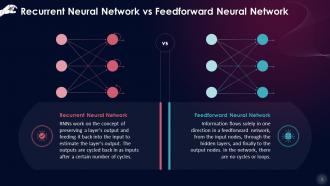

Slide 2

This slide defines a Recurrent Neural Network and a Feedforward Neural Network. An RNN preserves a layer's output and feeds it back into the layer as an input at the next time step, so each output depends on both the current input and what the network has already seen. In a feedforward network, information flows in only one direction: from the input nodes, through the hidden layers, to the output nodes.
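The difference between the two networks can be sketched in a few lines. This is a minimal illustration, not code from the slides: it assumes a simple tanh RNN with the recurrence h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h), and the weights `W_xh`, `W_hh`, and `b_h` are hypothetical toy values.

```python
import math

def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def rnn_forward(xs, W_xh, W_hh, b_h):
    """Run a simple RNN over a sequence of input vectors.

    Computes h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h), starting from h_0 = 0,
    and returns the hidden states h_1..h_T.
    """
    h = [0.0] * len(b_h)              # initial hidden state h_0
    states = []
    for x in xs:
        from_input = matvec(W_xh, x)  # contribution of the current input
        from_past = matvec(W_hh, h)   # previous output fed back in
        h = [math.tanh(a + r + b) for a, r, b in zip(from_input, from_past, b_h)]
        states.append(h)
    return states

def feedforward_layer(x, W, b):
    """A feedforward layer: its output depends only on the current input."""
    return [math.tanh(a + bi) for a, bi in zip(matvec(W, x), b)]

# Hypothetical toy weights: 1-dimensional input, 2-dimensional hidden state.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]

# A non-zero input only at the first step.
seq = [[1.0], [0.0], [0.0]]
for t, h in enumerate(rnn_forward(seq, W_xh, W_hh, b_h), start=1):
    print(t, [round(v, 3) for v in h])
```

The RNN's hidden state stays non-zero at steps 2 and 3 even though the input there is zero, because the step-1 output keeps being fed back; `feedforward_layer([0.0], W_xh, b_h)` returns all zeros for the same input.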

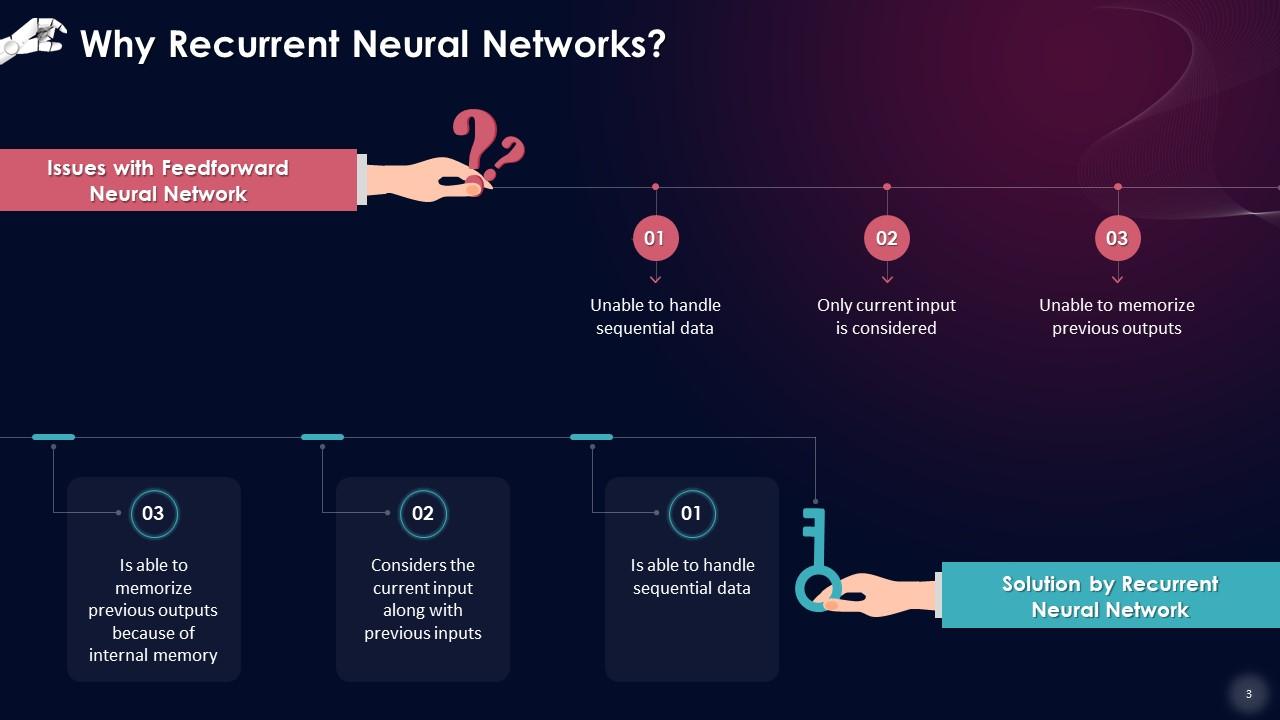

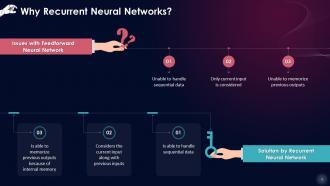

Slide 3

This slide draws a comparison between a Recurrent Neural Network and a Feedforward Neural Network by presenting solutions that RNNs bring to the issues faced by feedforward networks.

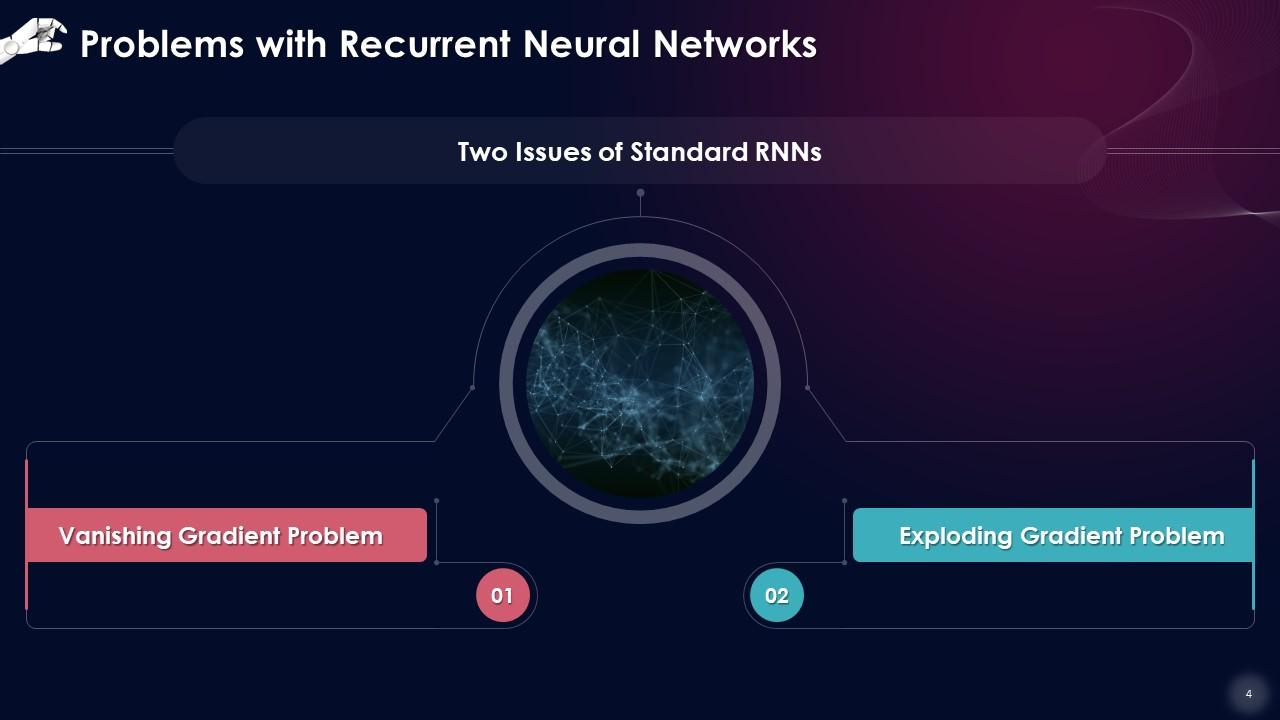

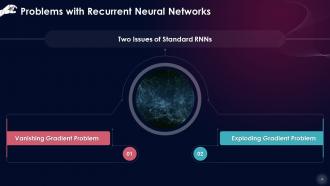

Slide 4

This slide talks about the two issues that standard RNNs present: the vanishing gradient problem and the exploding gradient problem.

Instructor’s Notes:

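- Vanishing Gradient Problem: As the gradient of the loss is backpropagated through many layers, or through many time steps in an RNN, it is repeatedly multiplied by small factors such as the derivatives of saturating activation functions (for example, sigmoid and tanh). The gradient then approaches zero, so the earliest layers or time steps receive almost no weight updates, which makes the network difficult to train.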

- Exploding Gradient Problem: An exploding gradient occurs when gradients grow exponentially during backpropagation instead of diminishing. Large error gradients build up and cause very large updates to the model weights during training. The main consequences of these gradient problems are long training times, low performance, and poor accuracy.
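Both problems come from the chain rule multiplying one local derivative per time step. A minimal sketch, assuming a hypothetical scalar RNN with zero input (not a model from the slides): in the linear case the gradient of the last hidden state with respect to the first is w^T, so over 50 steps it vanishes for |w| < 1 and explodes for |w| > 1. With tanh, each factor also includes the activation's derivative, which is at most 1 and shrinks as the unit saturates.

```python
import math

def linear_rnn_gradient(w, steps):
    """d h_T / d h_0 for the linear scalar RNN h_t = w * h_{t-1} + x_t.

    The local derivative d h_t / d h_{t-1} is w at every step, so the chain
    rule multiplies w by itself `steps` times.
    """
    grad = 1.0
    for _ in range(steps):
        grad *= w
    return grad

def tanh_rnn_gradient(w, steps, h0=0.1):
    """d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1}) (zero input).

    Each local derivative is w * (1 - h_t**2); tanh's derivative is at most 1
    and shrinks as the unit saturates.
    """
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        grad *= w * (1.0 - h * h)  # chain rule: multiply the local derivatives
    return grad

for w in (0.5, 1.0, 1.5):
    print(f"linear w={w}: {linear_rnn_gradient(w, 50):.3e}")
print(f"tanh   w=1.5: {tanh_rnn_gradient(1.5, 50):.3e}")
```

In this scalar tanh example the saturating derivative makes the gradient vanish even with w = 1.5; the linear case isolates the effect of the recurrent weight alone.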

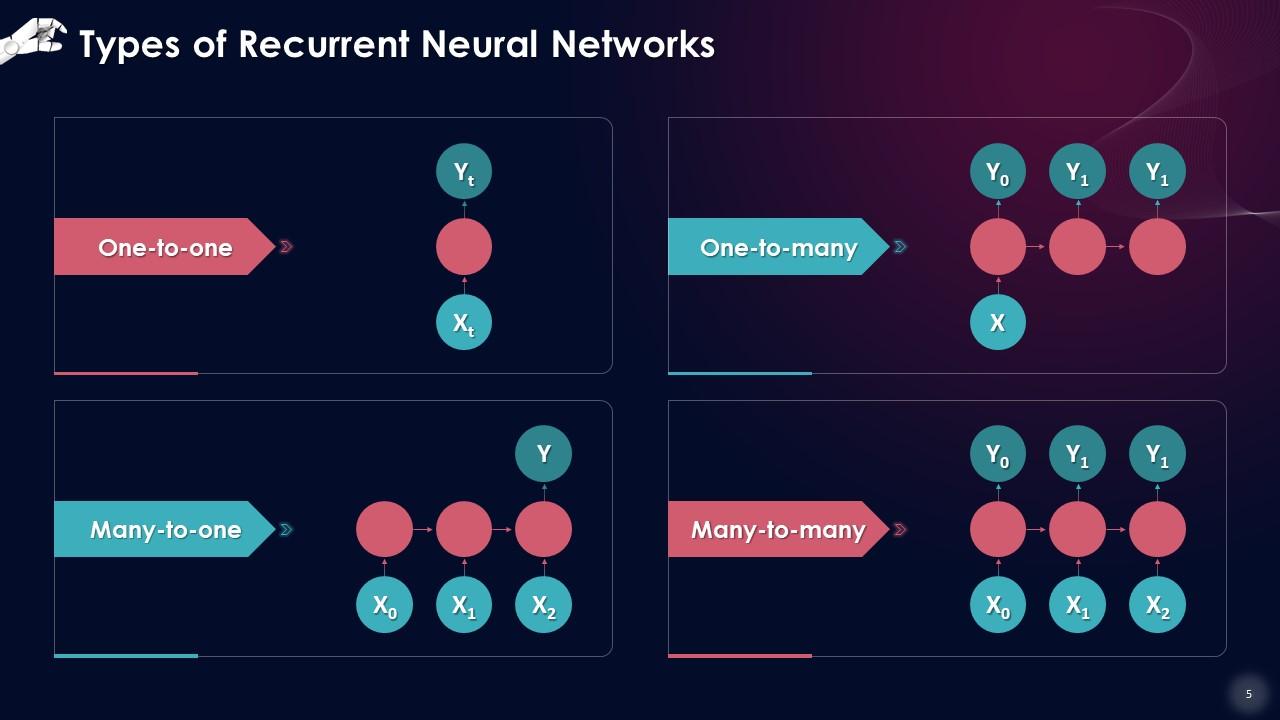

Slide 5

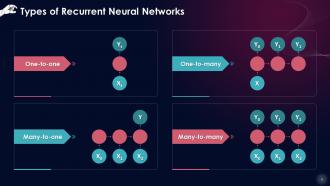

This slide lists the types of Recurrent Neural Networks: one-to-one, one-to-many, many-to-one, and many-to-many.
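The four types differ only in how inputs and outputs are wired around the same recurrent step. A minimal sketch, assuming a hypothetical scalar tanh cell with fixed weights: one-to-many suits tasks like generating a caption from one image, many-to-one suits tasks like classifying a whole sentence, and the many-to-many shown here is the synchronized form (one output per input, as when tagging each video frame). Many-to-many tasks where input and output lengths differ, such as translation, need an encoder-decoder arrangement that is not shown.

```python
import math

def step(h, x, w_h=0.5, w_x=1.0):
    """One scalar RNN step (hypothetical fixed weights for illustration)."""
    return math.tanh(w_h * h + w_x * x)

def one_to_one(x):
    """A single input mapped to a single output, like an ordinary feedforward net."""
    return step(0.0, x)

def one_to_many(x, length):
    """One input, then a sequence of outputs; each output is fed back as the next input."""
    h = step(0.0, x)
    outs = [h]
    for _ in range(length - 1):
        h = step(h, outs[-1])
        outs.append(h)
    return outs

def many_to_many(xs):
    """One output per input step."""
    h, outs = 0.0, []
    for x in xs:
        h = step(h, x)
        outs.append(h)
    return outs

def many_to_one(xs):
    """A single output after reading the whole sequence."""
    return many_to_many(xs)[-1]

print(one_to_one(1.0), one_to_many(1.0, 3), many_to_one([1.0, 2.0, 3.0]))
```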

Slide 6

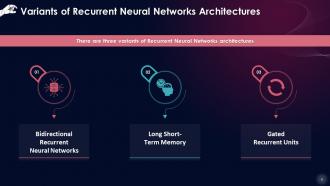

This slide depicts three variants of Recurrent Neural Network architecture: bidirectional recurrent neural networks, long short-term memory, and gated recurrent units.

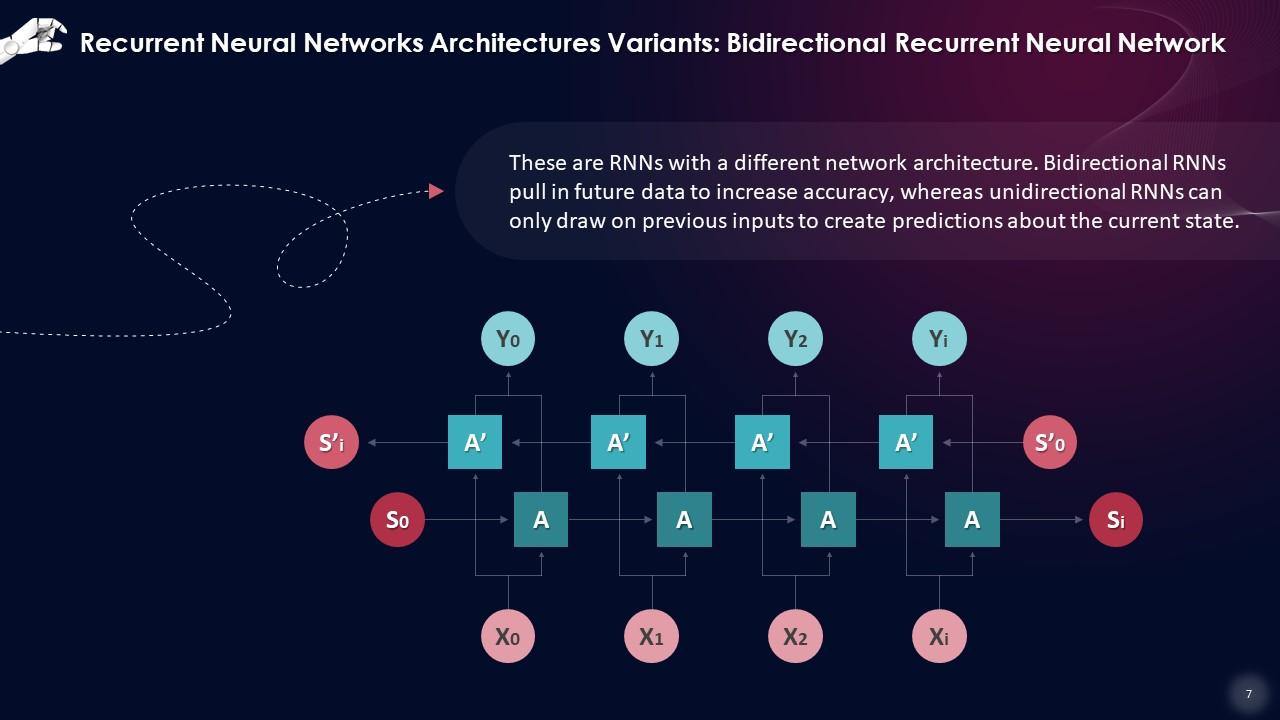

Slide 7

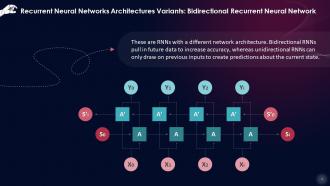

This slide describes the Bidirectional Recurrent Neural Network architecture. A bidirectional RNN processes the sequence both forward and backward, so it can pull in future inputs as well as past ones to increase accuracy, whereas a unidirectional RNN can only draw on previous inputs to make predictions about the current state.
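One common way to build a bidirectional RNN is to run one RNN over the sequence as given and a second over the reversed sequence, then pair their states at each position. A minimal sketch, assuming hypothetical scalar tanh cells with separate forward and backward weights:

```python
import math

def run_scalar_rnn(xs, w_h, w_x):
    """Return the hidden state at every position for a scalar tanh RNN."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_h * h + w_x * x)
        states.append(h)
    return states

def bidirectional(xs, fwd=(0.5, 1.0), bwd=(0.5, 1.0)):
    """Pair forward (past -> present) and backward (future -> present) states per position."""
    forward = run_scalar_rnn(xs, *fwd)
    # Run over the reversed sequence, then reverse again to re-align positions.
    backward = run_scalar_rnn(xs[::-1], *bwd)[::-1]
    return list(zip(forward, backward))

print(bidirectional([1.0, 0.0, 0.0]))
print(bidirectional([1.0, 0.0, 5.0]))
```

Changing only the last input leaves the forward state at position 0 unchanged but alters the backward state there, which is how the model "pulls in" future data.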

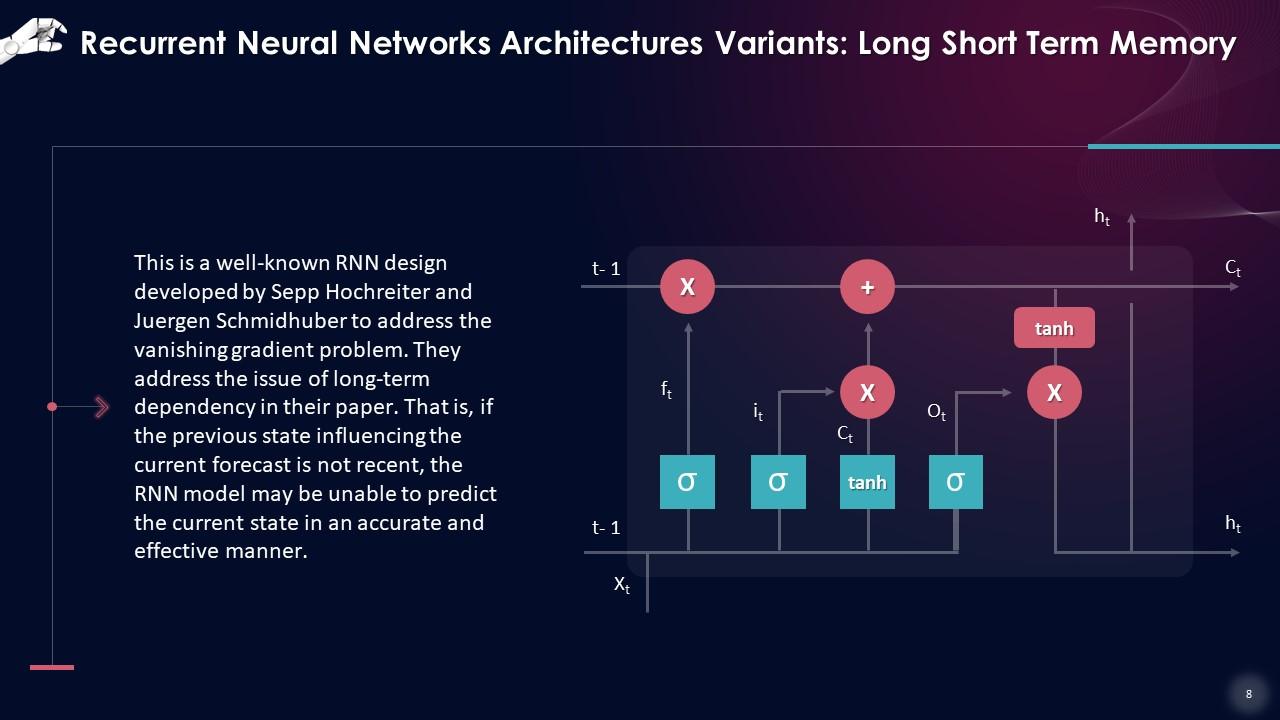

Slide 8

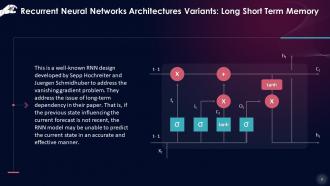

This slide gives information about the Long Short-Term Memory (LSTM) architecture. LSTM is a well-known RNN design developed by Sepp Hochreiter and Juergen Schmidhuber to address the vanishing gradient problem.

Instructor’s Notes: Each LSTM unit contains a memory "cell" controlled by three gates: an input gate, an output gate, and a forget gate. These gates regulate which information is written to the cell, kept in it, and passed on, and therefore which data is used to forecast the network's output.
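The three gates can be seen in a single cell update. This is a minimal scalar sketch of the standard LSTM equations; the parameter layout (a `(w_x, w_h, b)` triple per gate) and all weight values are hypothetical. The cell state is updated additively (`c = f * c_prev + i * g`), which is what lets information and gradients survive many steps when the forget gate stays open.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell.

    p maps "forget", "input", "output" and "cand" to hypothetical
    (w_x, w_h, b) weight triples.
    """
    def pre(gate):
        w_x, w_h, b = p[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))  # how much of the old cell state to keep
    i = sigmoid(pre("input"))   # how much of the new candidate to write
    o = sigmoid(pre("output"))  # how much of the cell state to expose
    g = math.tanh(pre("cand"))  # candidate values for the cell state
    c = f * c_prev + i * g      # additive update of the cell state
    h = o * math.tanh(c)        # new hidden state
    return h, c

def lstm_forward(xs, p):
    """Run the cell over a sequence; return all hidden states and the final cell state."""
    h, c, hs = 0.0, 0.0, []
    for x in xs:
        h, c = lstm_step(x, h, c, p)
        hs.append(h)
    return hs, c

# Hypothetical weights: the input gate opens only for large x; the forget gate is wide open.
long_memory = {"forget": (0.0, 0.0, 10.0), "input": (10.0, 0.0, -5.0),
               "output": (0.0, 0.0, 10.0), "cand": (1.0, 0.0, 0.0)}
# Same weights, but the forget gate is closed, so the cell is wiped every step.
short_memory = dict(long_memory, forget=(0.0, 0.0, -10.0))

print(lstm_forward([1.0, 0.0, 0.0, 0.0], long_memory)[1])
print(lstm_forward([1.0, 0.0, 0.0, 0.0], short_memory)[1])
```

With the forget gate open, the value written at step 1 is still in the cell after three more steps; with it closed, the cell state drops to nearly zero.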

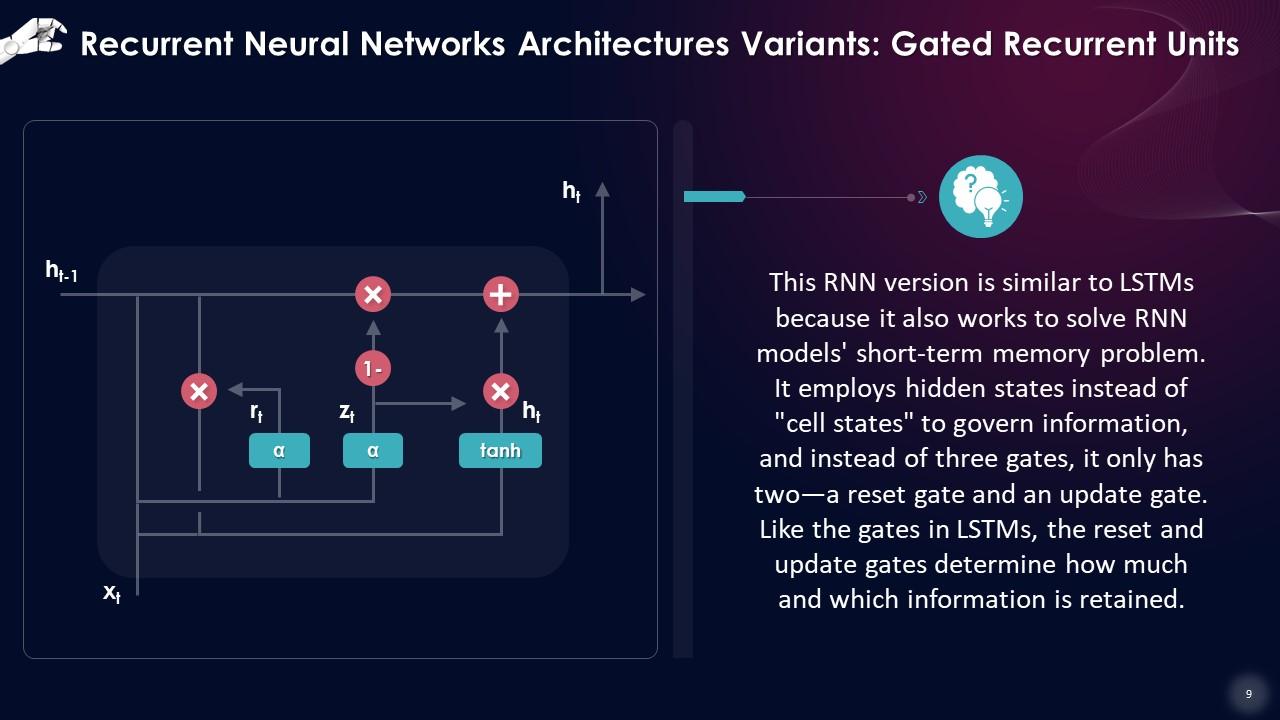

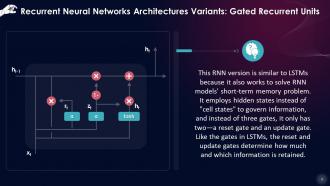

Slide 9

This slide describes the Gated Recurrent Unit (GRU) architecture. This RNN variant is similar to the LSTM because it also addresses the short-term memory problem of RNN models. However, it uses the hidden state alone to govern information instead of a separate "cell state", and it has two gates instead of three: a reset gate and an update gate.
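For comparison with the LSTM, here is a minimal scalar GRU step under the same hypothetical `(w_x, w_h, b)` parameter layout. It follows the convention h = z * h_prev + (1 - z) * n, as in Cho et al. and PyTorch's `nn.GRU`; some references swap the roles of z and 1 - z, so treat the exact form as one common choice rather than the only one.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h_prev, p):
    """One step of a scalar GRU cell.

    p maps "update", "reset" and "cand" to hypothetical (w_x, w_h, b) triples.
    There is no separate cell state: the hidden state carries all the memory.
    """
    wz, uz, bz = p["update"]
    wr, ur, br = p["reset"]
    wn, un, bn = p["cand"]
    z = sigmoid(wz * x + uz * h_prev + bz)          # update gate: keep old vs. take new
    r = sigmoid(wr * x + ur * h_prev + br)          # reset gate: how much past feeds the candidate
    n = math.tanh(wn * x + un * (r * h_prev) + bn)  # candidate hidden state
    return z * h_prev + (1.0 - z) * n               # blend old state and candidate

# Hypothetical weights: a large update-gate bias makes the cell keep its old state.
hold = {"update": (0.0, 0.0, 10.0), "reset": (0.0, 0.0, 0.0), "cand": (1.0, 1.0, 0.0)}
# A large negative bias makes it take the candidate instead.
overwrite = dict(hold, update=(0.0, 0.0, -10.0))

print(gru_step(1.0, 0.5, hold), gru_step(1.0, 0.5, overwrite))
```

With two gates and no cell state, a GRU needs three sets of weights where an LSTM needs four.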

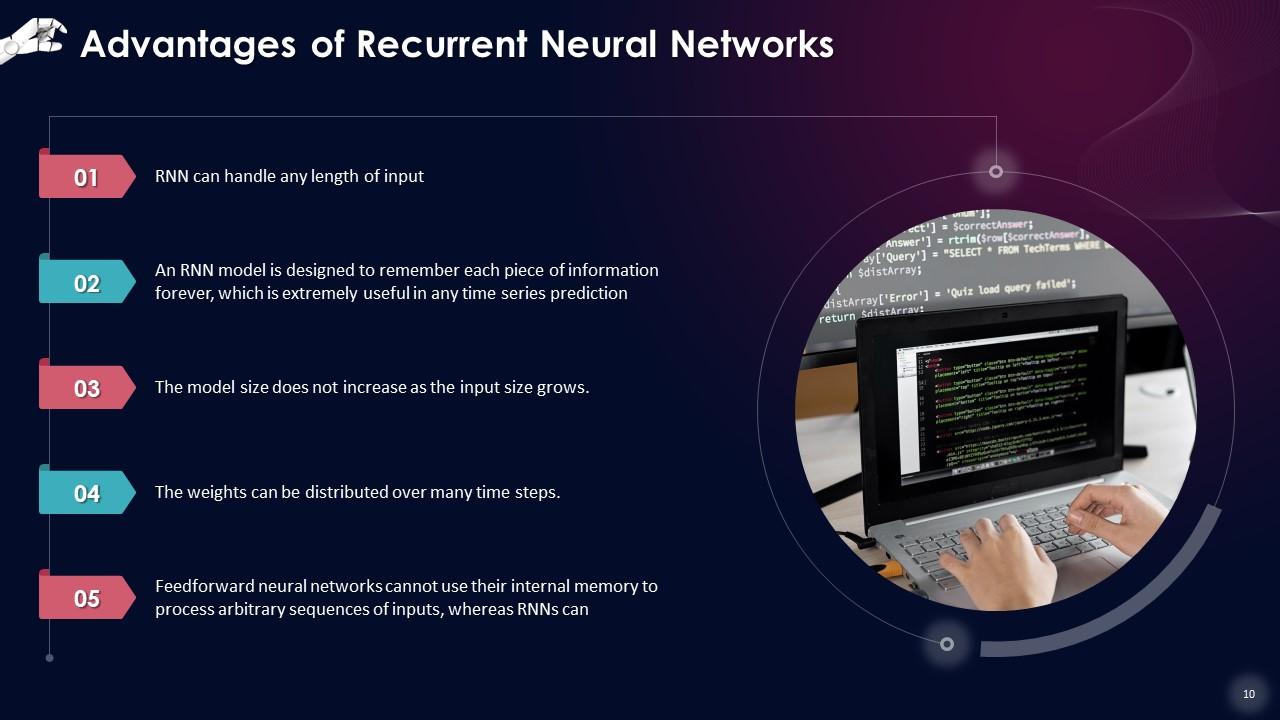

Slide 10

This slide lists the advantages of Recurrent Neural Networks: RNNs can handle inputs of any length, they remember information from earlier in the sequence, the model size does not increase as the input size grows, and the weights are shared across time steps.
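Weight sharing is what keeps the model size fixed: the same parameters are reused at every time step, so a longer input means more steps, not more weights. A minimal sketch with a hypothetical three-parameter scalar RNN:

```python
import math

class TinyRNN:
    """A hypothetical scalar RNN whose three parameters are reused at every time step."""

    def __init__(self, w_h=0.5, w_x=1.0, b=0.0):
        self.params = {"w_h": w_h, "w_x": w_x, "b": b}

    def num_params(self):
        return len(self.params)

    def run(self, xs):
        """Return the final hidden state after reading a sequence of any length."""
        h = 0.0
        p = self.params
        for x in xs:  # the same weights are applied at every step
            h = math.tanh(p["w_h"] * h + p["w_x"] * x + p["b"])
        return h

model = TinyRNN()
print(model.run([1.0] * 3), model.run([1.0] * 1000), model.num_params())
```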

Slide 11

This slide lists the disadvantages of Recurrent Neural Networks: computation is slow because time steps must be processed one after another, models can be challenging to train, and exploding and vanishing gradient problems are common.

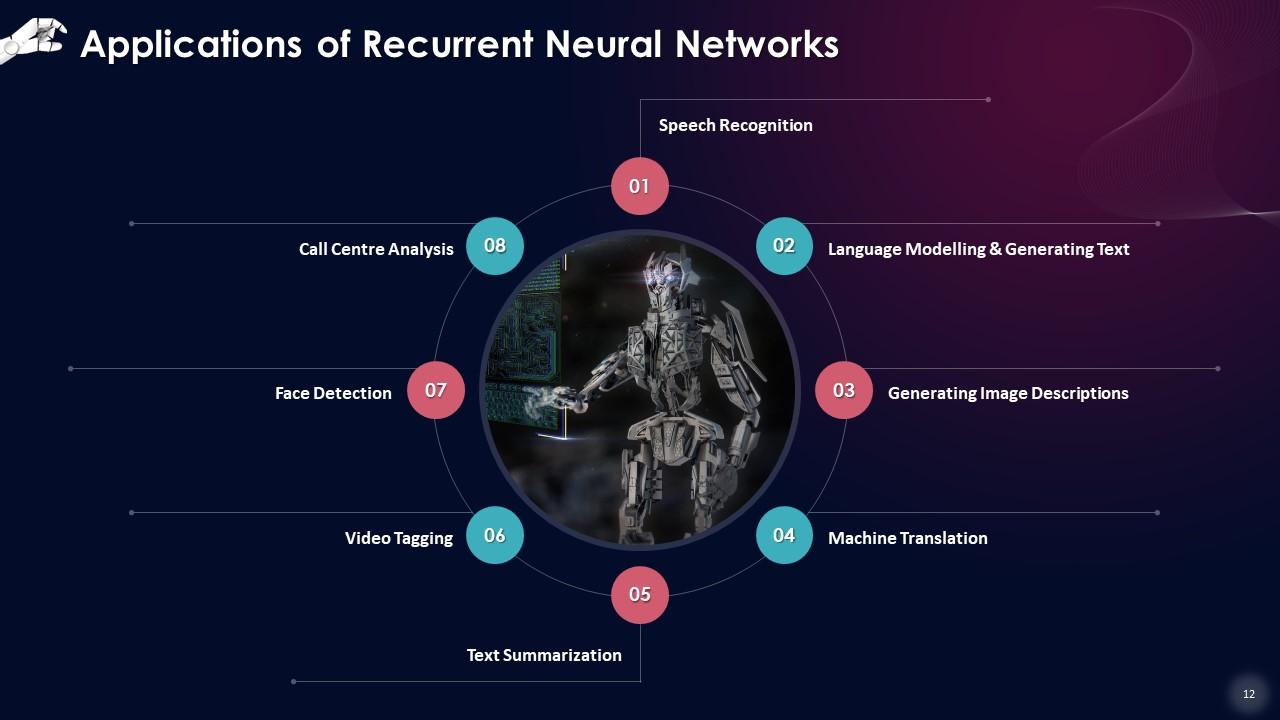

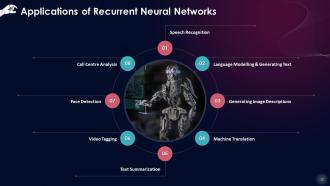

Slide 12

This slide lists applications of Recurrent Neural Networks. These are speech recognition, language modelling & generating text, generating image descriptions, machine translation, text summarization, video tagging, face detection, and call centre analysis.

Instructor’s Notes:

- Speech Recognition: When sound waves are used as the input source, RNNs can predict phonetic segments. The inputs are phonemes or acoustic signals from an audio file that have been suitably preprocessed.

- Language Modelling & Generating Text: Given a sequence of words as input, an RNN predicts the probability of each possible next word. This is also useful in translation, where the most likely output sentence is taken as the correct one. In text generation, the probabilities output at one time step are used to sample the word fed in at the next step.

- Generating Image Descriptions: A combination of CNNs and RNNs is used to describe what is happening inside an image. The CNN processes the image (for example, by segmenting it), and the RNN generates the description from the CNN's output.

- Machine Translation: RNNs can translate text from one language to another. Many neural translation systems have been built on advanced RNN variants. The source-language text is the input, and the output is in the user's chosen target language.

- Text Summarization: RNNs can summarize content from books and other long documents, adapting it for distribution in applications that can't handle large amounts of text.

- Video Tagging: RNNs can be used in video search to describe the visual content of a video by processing it as a sequence of frames.

- Face Detection: Image recognition is one of the simplest RNN applications to understand. The network takes a single image as input and produces a description of the image as a sequence of outputs.

- Call Centre Analysis: The entire analysis procedure can be automated by using RNNs to process and synthesize the actual speech from calls.
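The sampling loop described under Language Modelling & Generating Text can be sketched as follows. This is a minimal illustration under stated assumptions: the five-word vocabulary, the hidden size, and the weights are hypothetical and untrained, so the output is random word salad. It shows the mechanics only: the RNN turns its hidden state into a probability distribution with a softmax, a word is sampled from it, and that word becomes the next input.

```python
import math
import random

VOCAB = ["<s>", "the", "cat", "sat", "."]  # hypothetical toy vocabulary

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def random_matrix(rng, rows, cols):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

def make_params(hidden=4, seed=0):
    """Random, untrained weights; a real language model would learn these from text."""
    rng = random.Random(seed)
    v = len(VOCAB)
    return {
        "E": random_matrix(rng, v, hidden),          # one input vector per word
        "W_hh": random_matrix(rng, hidden, hidden),  # recurrent weights
        "W_out": random_matrix(rng, v, hidden),      # hidden state -> one score per word
    }

def generate(params, length, seed=0):
    """Sample `length` words, feeding each sampled word back in as the next input."""
    rng = random.Random(seed)
    size = len(params["W_hh"])
    h = [0.0] * size
    word = 0  # index of the "<s>" start token
    out = []
    for _ in range(length):
        x = params["E"][word]
        h = [math.tanh(x[i] + sum(params["W_hh"][i][j] * h[j] for j in range(size)))
             for i in range(size)]
        probs = softmax([sum(w * hv for w, hv in zip(row, h)) for row in params["W_out"]])
        word = rng.choices(range(len(VOCAB)), weights=probs)[0]  # sample the next word
        out.append(VOCAB[word])
    return out

print(" ".join(generate(make_params(), 8)))
```

Sampling (rather than always taking the most likely word) gives varied text; taking the argmax instead would make generation deterministic.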

Core Concepts Of Recurrent Neural Networks Training Ppt with all 28 slides:

Use our Core Concepts Of Recurrent Neural Networks Training Ppt to effectively help you save your valuable time. They are readymade to fit into any presentation structure.
