Understanding Gradient Descent Training Ppt

These slides explain the concept of gradient descent, an optimization process used in machine learning algorithms to minimize the cost function, mainly by updating the learning model's parameters. They also discuss its types: batch gradient descent, stochastic gradient descent, and mini-batch gradient descent.


- Google Slides is free presentation software from Google.

- All our content is 100% compatible with Google Slides.

- Just download our designs, and upload them to Google Slides and they will work automatically.

- Amaze your audience with SlideTeam and Google Slides.


Want Changes to This PPT Slide? Check out our Presentation Design Services

- The widescreen aspect ratio has become a very popular format. When you download this product, the ZIP file will contain it in both standard and widescreen formats.


- Some older products may only be available in standard format, but they can easily be converted to widescreen.

- To do this, open the SlideTeam product in PowerPoint and go to Design (on the top bar) -> Page Setup, then select "On-screen Show (16:9)" in the "Slides Sized for" drop-down.

- The slide or theme will change to widescreen, and all graphics will adjust automatically. You can similarly convert our content to any other desired screen aspect ratio.


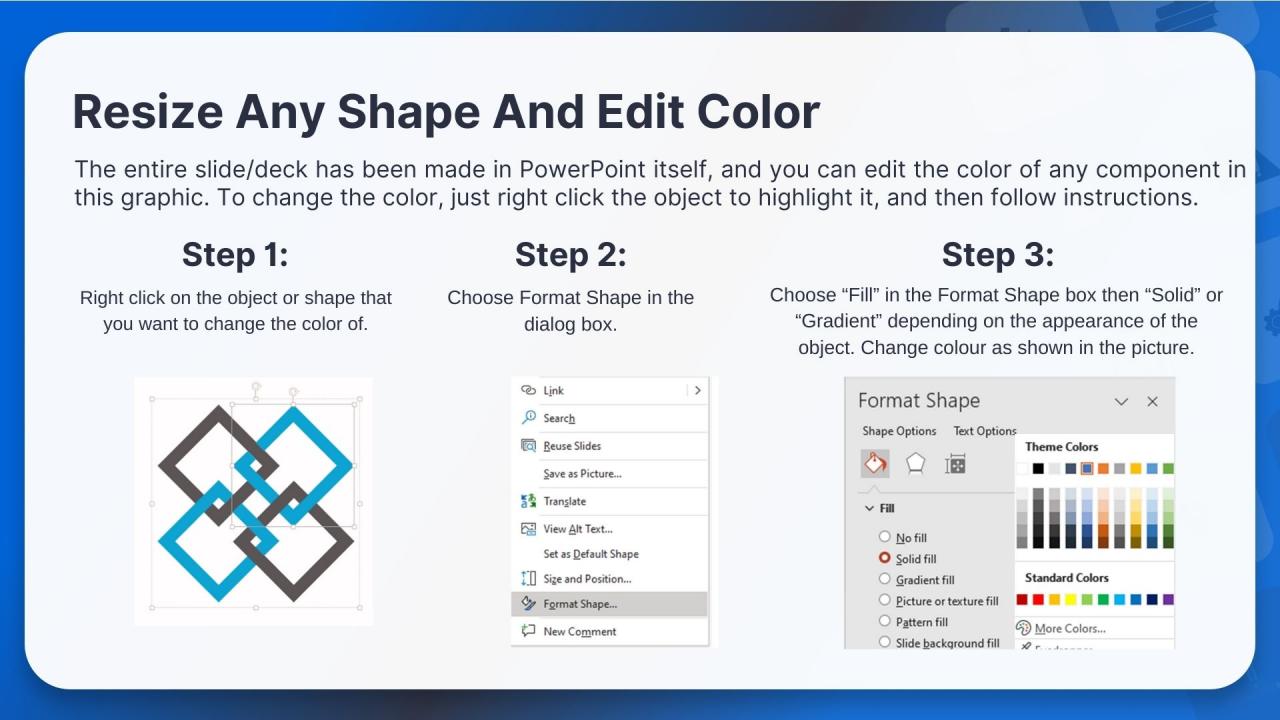

PowerPoint presentation slides

Presenting Understanding Gradient Descent. These slides are 100 percent made in PowerPoint and are compatible with all screen types and monitors. They also support Google Slides. Premium Customer Support available. Suitable for use by managers, employees, and organizations. These slides are easily customizable. You can edit the color, text, icon, and font size to suit your requirements.


Content of this Powerpoint Presentation

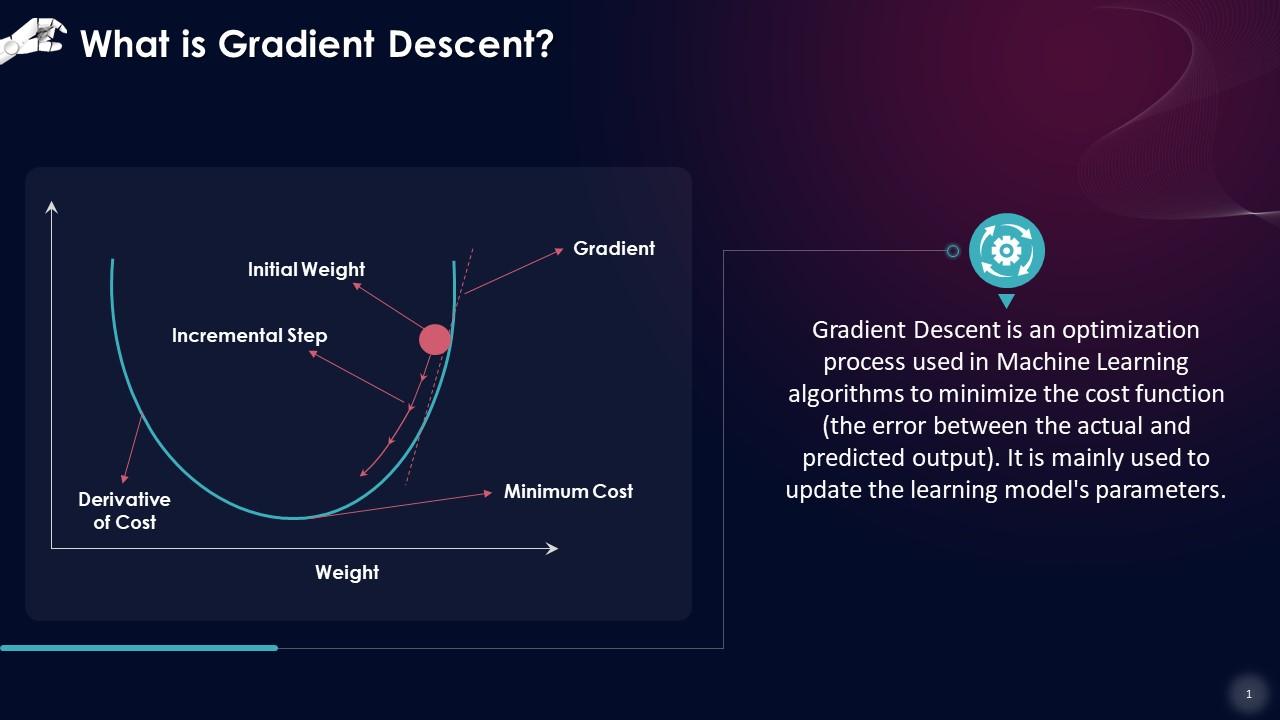

Slide 1

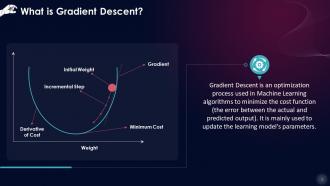

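This slide introduces the concept of gradient descent. Gradient descent is an iterative optimization process used in machine learning algorithms to minimize the cost function, which measures the error between the actual and predicted outputs. It is mainly used to update the learning model's parameters: at each step, the parameters are moved a small amount in the direction opposite to the gradient of the cost function, which is the direction in which the cost decreases most steeply.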
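As a minimal sketch, assume a one-parameter cost function J(w) = (w - 3)^2, whose gradient is 2(w - 3). Gradient descent repeatedly applies the update w ← w − η·∇J(w). The cost function, the learning rate η (`learning_rate`), the starting point, and the step count are illustrative assumptions, not values taken from the slides.

```python
def cost(w: float) -> float:
    """Toy cost function J(w) = (w - 3)^2, minimized at w = 3 (illustrative assumption)."""
    return (w - 3.0) ** 2


def cost_gradient(w: float) -> float:
    """Derivative of the toy cost: dJ/dw = 2 * (w - 3)."""
    return 2.0 * (w - 3.0)


def gradient_descent(w0: float, learning_rate: float = 0.1, steps: int = 100) -> float:
    """Repeatedly move w against the gradient for a fixed number of steps."""
    w = w0
    for _ in range(steps):
        # Step opposite to the gradient, i.e. in the direction that lowers the cost.
        w -= learning_rate * cost_gradient(w)
    return w


if __name__ == "__main__":
    w_final = gradient_descent(w0=0.0)
    print(f"w = {w_final:.4f}, cost = {cost(w_final):.6f}")
```

Starting from w = 0, each step here shrinks the distance to the minimum by a factor of 0.8, so w ends up very close to 3. If the learning rate is too large (for this cost, above 1.0), the same update overshoots further on every step and diverges, which is why the step size matters.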

Slide 2

This slide lists types of gradient descent. These include batch gradient descent, stochastic gradient descent, and mini-batch gradient descent.

Instructor’s Notes:

- Batch Gradient Descent: Batch gradient descent sums the errors for every point in the training set and updates the model only after all training examples have been evaluated. One such full pass over the training set is known as a training epoch. Batch gradient descent usually produces a stable error gradient and steady convergence, although it can settle in a local minimum rather than the global minimum, which is not always the best solution.

- Stochastic Gradient Descent: Stochastic gradient descent also makes full passes (epochs) over the dataset, but it updates the parameters after each training example, one example at a time. These frequent updates can give more detailed and faster progress, but they also produce noisy gradients; this noise can help the optimizer escape a local minimum and find a better, possibly global, one.

- Mini-Batch Gradient Descent: Mini-batch gradient descent combines the principles of batch gradient descent and stochastic gradient descent. It divides the training dataset into small batches and updates the model's parameters after each batch. This method balances the computational efficiency of batch gradient descent with the speed of stochastic gradient descent.
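The three variants in the notes above differ only in how many training examples feed each parameter update. The sketch below assumes a linear model y ≈ w·x + b with a mean-squared-error cost (the slides do not name a model), and the function names `mse_gradients` and `train` are hypothetical. Setting `batch_size` to the dataset size gives batch gradient descent, `batch_size=1` gives stochastic gradient descent, and anything in between gives mini-batch gradient descent. Reshuffling the data every epoch is a common convention assumed here, not something the slides specify.

```python
import random


def mse_gradients(w, b, batch):
    """Gradients of mean squared error for y ~ w*x + b over one batch of (x, y) pairs."""
    n = len(batch)
    grad_w = sum(2.0 * (w * x + b - y) * x for x, y in batch) / n
    grad_b = sum(2.0 * (w * x + b - y) for x, y in batch) / n
    return grad_w, grad_b


def train(data, batch_size, learning_rate=0.1, epochs=500, seed=0):
    """Fit y ~ w*x + b with gradient descent.

    batch_size == len(data) -> batch gradient descent (one update per epoch)
    batch_size == 1         -> stochastic gradient descent (one update per example)
    anything in between     -> mini-batch gradient descent
    """
    rng = random.Random(seed)
    data = list(data)  # copy so shuffling does not modify the caller's list
    w, b = 0.0, 0.0
    for _ in range(epochs):  # one epoch = one full pass over the training set
        rng.shuffle(data)  # reshuffle so batches differ between epochs
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad_w, grad_b = mse_gradients(w, b, batch)
            # Parameters are updated once per batch.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    # Noise-free toy data from y = 2x + 1 (illustrative assumption).
    data = [(i / 10, 2.0 * (i / 10) + 1.0) for i in range(20)]
    for name, size in [("batch", len(data)), ("mini-batch", 5), ("stochastic", 1)]:
        w, b = train(data, batch_size=size)
        print(f"{name:>10}: w = {w:.3f}, b = {b:.3f}")
```

On this noise-free toy data all three settings should recover w close to 2 and b close to 1. What changes is the number of updates per epoch (1, 4, and 20 here) and how noisy each individual update is.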

Understanding Gradient Descent Training Ppt with all 18 slides:

Use our Understanding Gradient Descent Training Ppt to save your valuable time. The slides are ready-made to fit into any presentation structure.


SlideTeam is my go-to resource for professional PPT templates. They have an exhaustive library, giving you the option to download the best slide!


“The presentation template I got from you was a very useful one. My presentation went very well and the comments were positive. Thank you for the support. Kudos to the team!”