Deep Learning Mastering The Fundamentals Training Ppt

This training module on Mastering the Fundamentals of Deep Learning gives in-depth knowledge about the importance and working of deep learning. It also compares deep learning and machine learning and comprehensively covers deep learning functions: Sigmoid Activation Function, Hyperbolic Tangent Function, ReLU, Loss Functions, and Optimizer Functions. It dives into the process of deep learning, highlighting its advantages, limitations, and applications. It also includes Key Takeaways and Discussion Questions related to the topic to make the training session more interactive. The deck has PPT slides on About Us, Vision, Mission, Goal, 30-60-90 Days Plan, Timeline, Roadmap, Training Completion Certificate, and Energizer Activities. It also includes a Client Proposal and Assessment Form for training evaluation. Get access to our artificial intelligence and machine learning PPT now.

PowerPoint presentation slides

Presenting Training Deck on Deep Learning Mastering the Fundamentals. This deck comprises 104 slides. Each slide is well crafted and designed by our PowerPoint experts. This PPT presentation is thoroughly researched by the experts, and every slide consists of appropriate content. All slides are customizable. You can add or delete the content as per your need. Not just this, you can also make the required changes in the charts and graphs. Download this professionally designed business presentation, add your content, and present it with confidence.

People who downloaded this PowerPoint presentation also viewed the following :

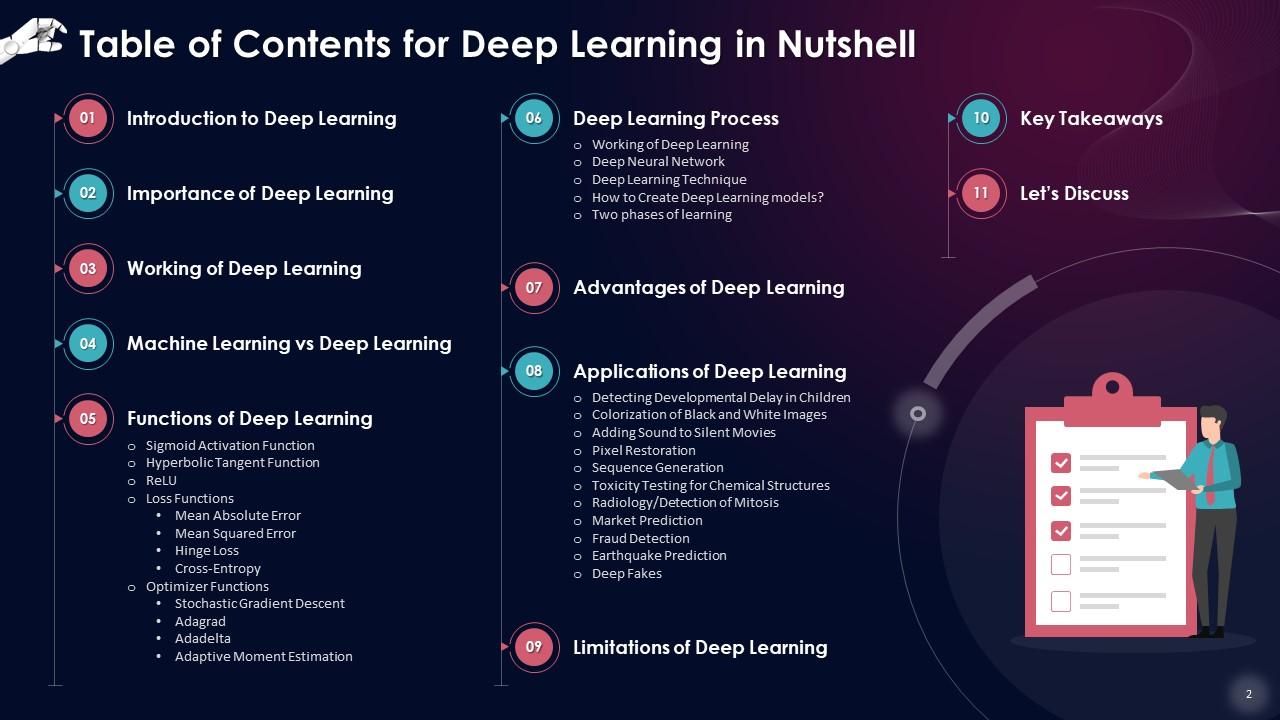

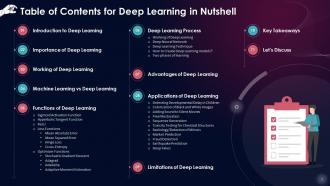

Content of this PowerPoint Presentation

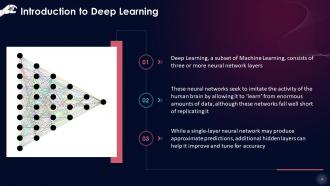

Slide 4

This slide states that Deep Learning, a subset of Machine Learning, is built on neural networks with three or more layers. These neural networks seek to imitate the activity of the human brain by ‘learning’ from enormous amounts of data, although they fall well short of replicating it. While a single-layer neural network may produce approximate predictions, additional hidden layers can help refine and tune the network for accuracy.

Instructor Notes:

Most Artificial Intelligence (AI) applications and services rely on Deep Learning to improve automation by executing analytical and physical tasks without human intervention. Deep Learning is used in both common products and services (such as digital assistants, voice-enabled TV remote controls, and credit card fraud detection) and emerging technologies (such as self-driving cars).

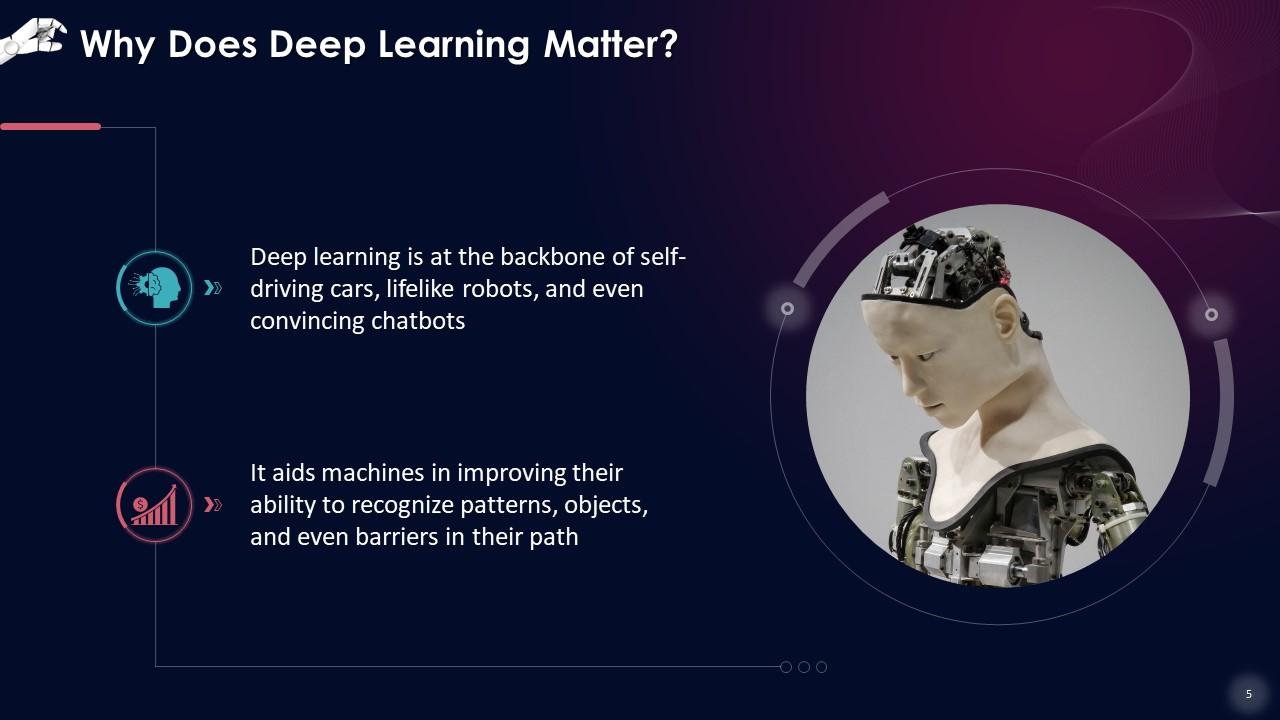

Slide 5

This slide gives an overview of Deep Learning, which is the backbone of self-driving cars, lifelike robots, and even convincing chatbots. It helps machines improve their ability to recognize patterns, objects, and even barriers in their path.

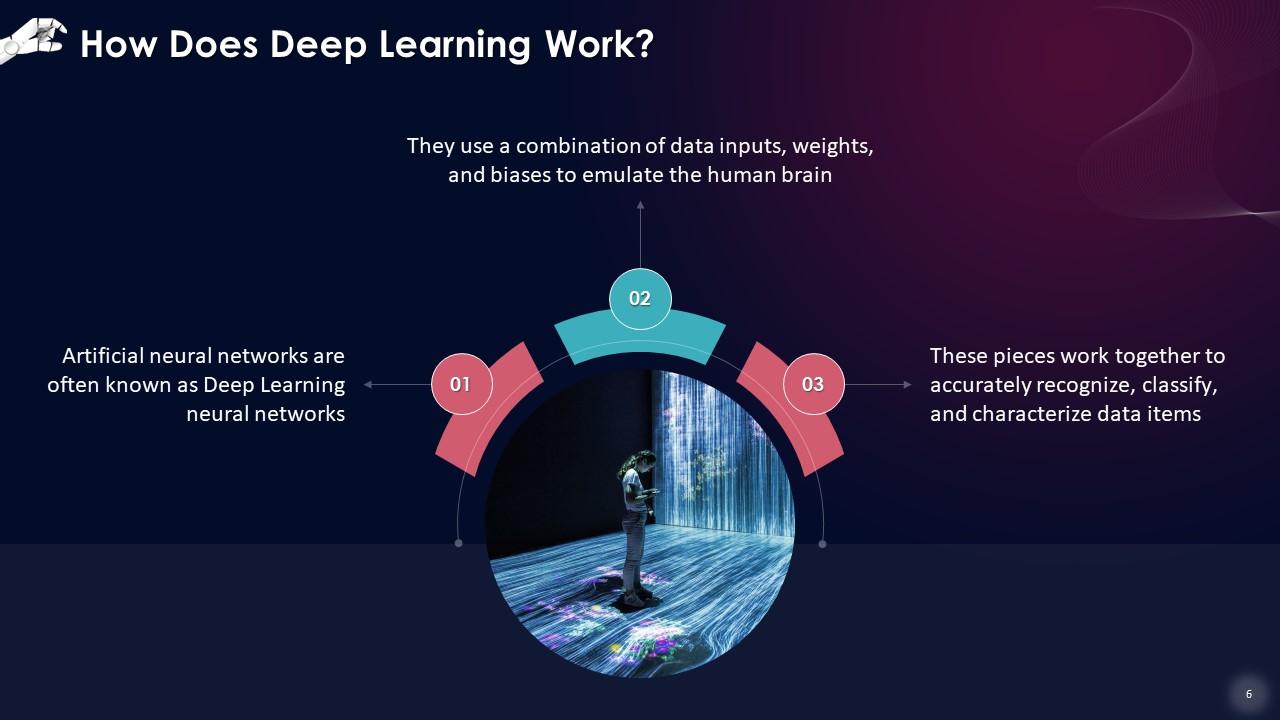

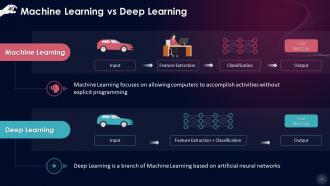

Slide 6

This slide states that artificial neural networks are often known as Deep Learning neural networks. They use a combination of data inputs, weights, and biases to emulate the human brain. These pieces work together to accurately recognize, classify, and characterize data items.

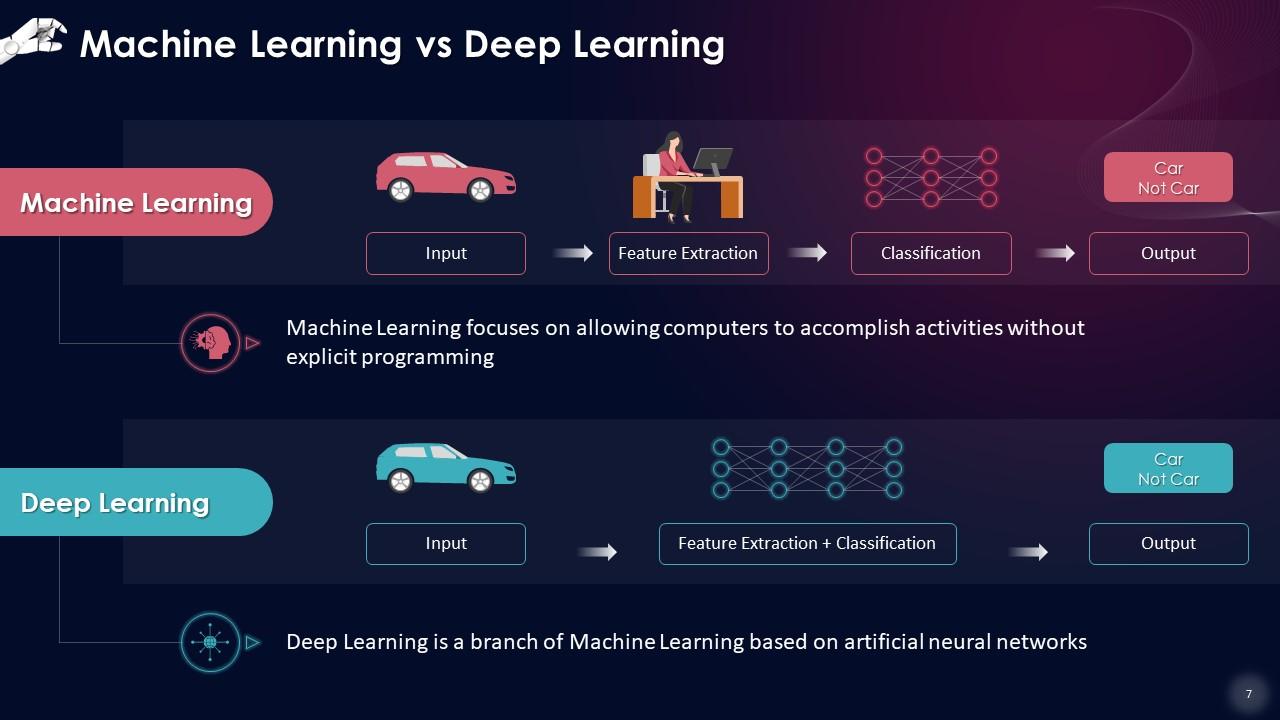

Slide 7

This slide states that Machine Learning focuses on allowing computers to accomplish activities without explicit programming. Deep Learning is a branch of Machine Learning based on artificial neural networks.

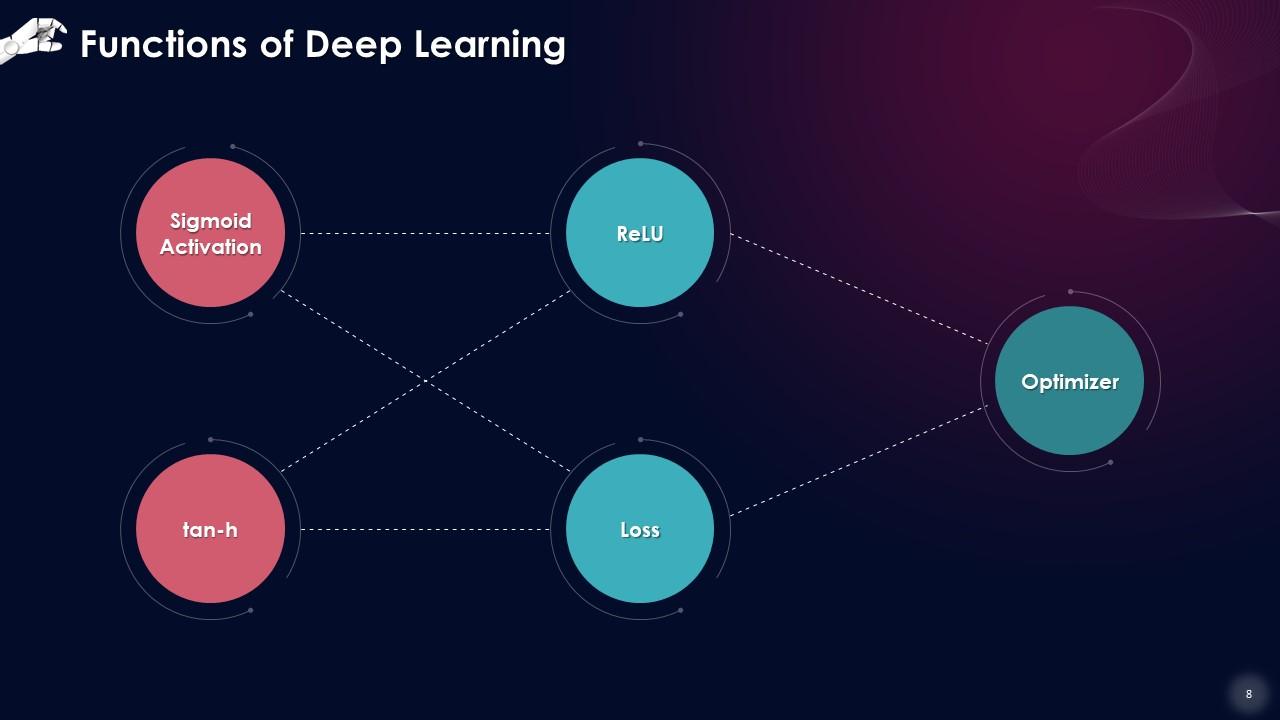

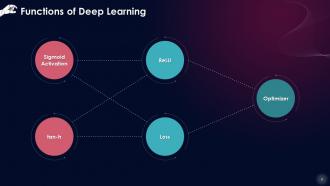

Slide 8

This slide states multiple types of Deep Learning functions: Sigmoid Activation Function, tan-h (Hyperbolic Tangent Function), ReLU (Rectified Linear Units), Loss Functions, and Optimizer Functions.

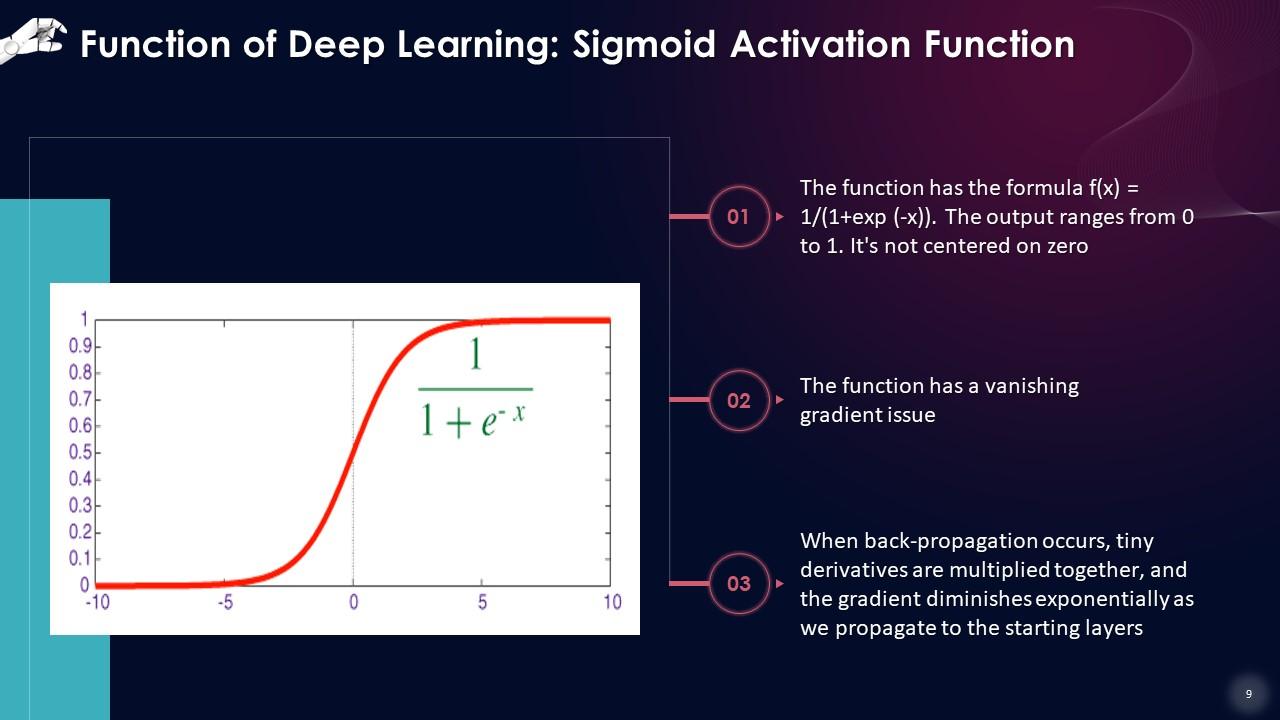

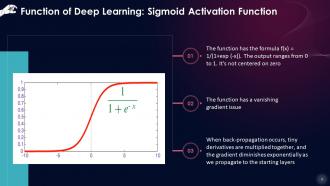

Slide 9

This slide gives an overview of the sigmoid activation function, which has the formula f(x) = 1/(1+exp(-x)). The output ranges from 0 to 1 and is not centered on zero. The function has a vanishing gradient issue: its derivative is at most 0.25, so when back-propagation multiplies these small derivatives together, the gradient diminishes exponentially as it propagates back to the earliest layers.
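The sigmoid formula and its shrinking gradient can be sketched in a few lines of Python. This is a minimal illustration, not part of the deck: the function names are hypothetical, and `chained_gradient` assumes every layer contributes only its sigmoid derivative (weights are ignored) to keep the effect visible.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid f(x) = 1 / (1 + exp(-x)); output lies between 0 and 1.

    Not hardened against overflow for very large negative x.
    """
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x: float) -> float:
    """Derivative f'(x) = f(x) * (1 - f(x)); its maximum is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

def chained_gradient(depth: int, x: float = 0.0) -> float:
    """Multiply one sigmoid derivative per layer (simplifying assumption:
    weights are ignored), as back-propagation does along a chain of layers."""
    grad = 1.0
    for _ in range(depth):
        grad *= sigmoid_grad(x)
    return grad

# Even in the best case (x = 0 at every layer) the product is 0.25 ** depth.
for depth in (1, 5, 10):
    print(depth, chained_gradient(depth))
```

With ten layers the best-case product is already below one millionth, which is why the earliest layers learn very slowly.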

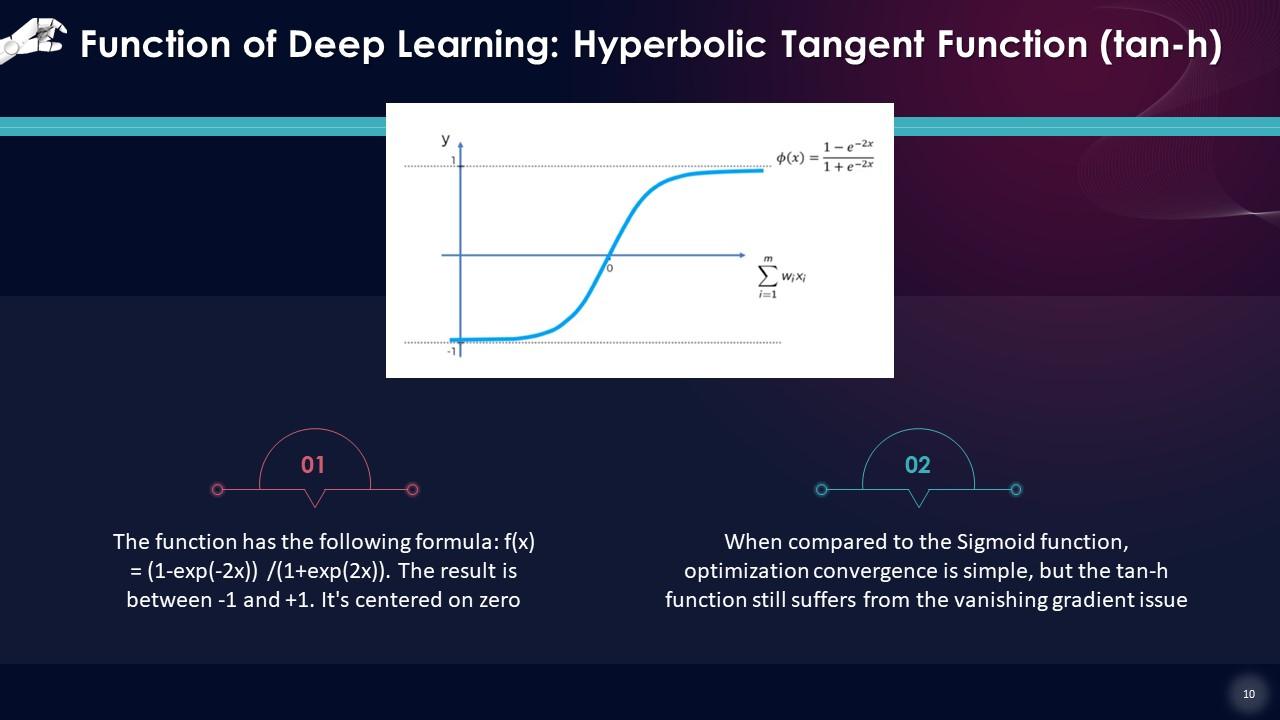

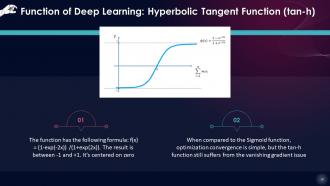

Slide 10

This slide states that the Hyperbolic Tangent function has the following formula: f(x) = (1-exp(-2x))/(1+exp(-2x)). The result is between -1 and +1, and it is centered on zero. Compared to the Sigmoid function, optimization converges more easily, but the tan-h function still suffers from the vanishing gradient issue.
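As a quick check, the standard form f(x) = (1-exp(-2x))/(1+exp(-2x)) can be compared against Python's built-in `math.tanh`. The helper names below are illustrative only.

```python
import math

def tanh_from_formula(x: float) -> float:
    """Hyperbolic tangent written out as (1 - exp(-2x)) / (1 + exp(-2x))."""
    e = math.exp(-2.0 * x)
    return (1.0 - e) / (1.0 + e)

def tanh_grad(x: float) -> float:
    """Derivative 1 - tanh(x)^2: equal to 1 at x = 0, close to 0 for large |x|."""
    return 1.0 - math.tanh(x) ** 2

for x in (-2.0, 0.0, 0.7, 2.0):
    # The two columns agree, and the output is symmetric around zero.
    print(x, tanh_from_formula(x), math.tanh(x), tanh_grad(x))
```

The derivative falls toward zero as |x| grows, which is the source of the vanishing gradient issue mentioned above.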

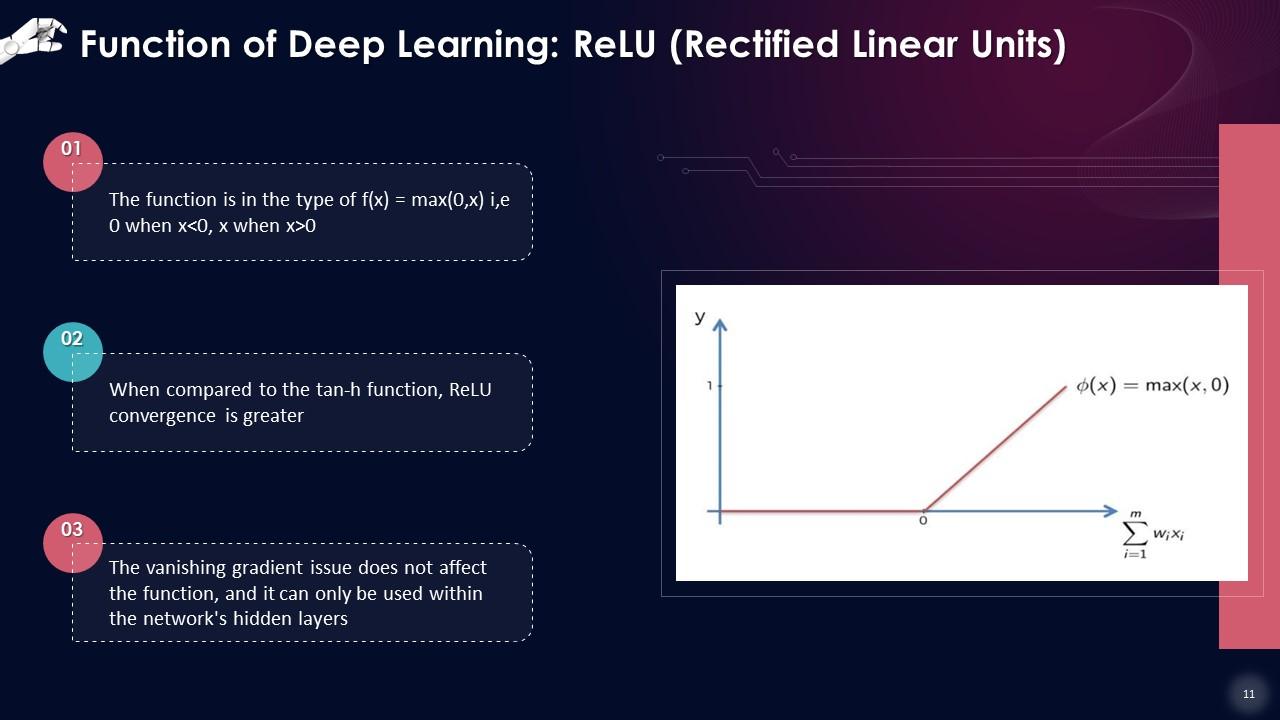

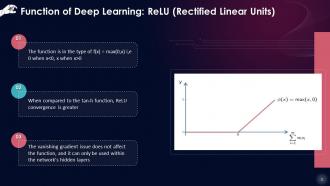

Slide 11

This slide gives an overview of ReLU (Rectified Linear Units). The function has the form f(x) = max(0, x), i.e., 0 when x < 0 and x when x ≥ 0. Compared to the tan-h function, ReLU typically converges faster. Because its gradient is 1 for every positive input, the function is far less affected by the vanishing gradient issue, although units whose input stays negative receive a zero gradient. It is typically used within the network's hidden layers.
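A minimal sketch of ReLU and its gradient follows; the function names are illustrative, and the gradient at exactly x = 0 is set to 0 by convention here.

```python
def relu(x: float) -> float:
    """Rectified linear unit: f(x) = max(0, x)."""
    return max(0.0, x)

def relu_grad(x: float) -> float:
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise (0 at x = 0 by convention)."""
    return 1.0 if x > 0 else 0.0

for x in (-2.0, 0.0, 3.5):
    print(x, relu(x), relu_grad(x))
```

Because the gradient is exactly 1 for positive inputs, multiplying it across layers does not shrink the signal the way repeated sigmoid or tan-h derivatives do.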

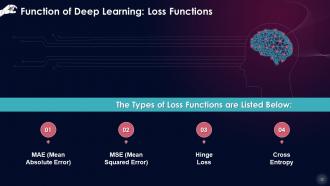

Slide 12

This slide lists the types of loss functions as a component of Deep Learning. These include mean absolute error, mean squared error, hinge loss, and cross-entropy.

Slide 13

This slide states that mean absolute error (MAE) is a metric for calculating the average absolute difference between predicted and actual values: sum all the absolute differences and divide by the number of observations. It does not penalize large errors as harshly as Mean Squared Error (MSE).
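The calculation can be written directly from that description. The function name and the sample numbers below are made up for illustration.

```python
def mean_absolute_error(actual, predicted):
    """Sum of absolute differences divided by the number of observations."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

# Absolute differences are 1, 0 and 2, so the mean is 3 / 3.
print(mean_absolute_error([3.0, 5.0, 2.0], [2.0, 5.0, 4.0]))  # 1.0
```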

Slide 14

This slide describes that MSE is determined by summing the squares of the differences between predicted and actual values and dividing by the number of observations. Because the differences are squared, large errors dominate the metric, so its value needs careful interpretation. It is most useful when large, unexpected forecast errors should be penalized heavily. We cannot rely on MSE alone, since a few outliers can increase it even while the model performs well on most observations.
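The sensitivity of MSE to a single large error can be seen with a small, made-up example. The function name and the data are illustrative assumptions.

```python
def mean_squared_error(actual, predicted):
    """Sum of squared differences divided by the number of observations."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

actual = [10.0, 12.0, 11.0, 13.0]
evenly_off = [10.5, 11.5, 11.5, 12.5]   # every prediction is off by 0.5
one_outlier = [10.0, 12.0, 11.0, 23.0]  # three exact predictions, one off by 10

print(mean_squared_error(actual, evenly_off))   # 0.25
print(mean_squared_error(actual, one_outlier))  # 25.0
```

The second set of predictions is exact on three of four observations, yet its MSE is a hundred times larger because the single miss is squared.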

Slide 15

This slide explains that the hinge loss function is commonly seen in support vector machines. The function has the form max[0, 1-yf(x)], where y is the true label (+1 or -1) and f(x) is the model's score. When yf(x) >= 1, the loss is 0; when yf(x) < 1, the loss rises linearly, so misclassified points that are far from the margin are penalized the most.
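Evaluating max[0, 1-yf(x)] directly shows where the loss is zero and how it grows. This sketch assumes labels of +1 or -1 and a real-valued score; the function name is illustrative.

```python
def hinge_loss(y: int, score: float) -> float:
    """Hinge loss max(0, 1 - y * f(x)) for a label y in {+1, -1}."""
    return max(0.0, 1.0 - y * score)

# Correct and outside the margin (y * f(x) >= 1): no loss.
print(hinge_loss(+1, 2.0))   # 0.0
# Correct but inside the margin: small loss.
print(hinge_loss(+1, 0.5))   # 0.5
# Misclassified: the loss grows by 1 for each unit the score moves away.
print(hinge_loss(+1, -1.0))  # 2.0
print(hinge_loss(+1, -2.0))  # 3.0
```

The last two lines differ by exactly 1, which illustrates that the penalty increases linearly with the distance from the margin.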

Slide 16

This slide states that cross-entropy is a log-based loss function applied to predicted probabilities ranging from 0 to 1. It assesses the effectiveness of a classification model. For example, when the predicted probability of the true class is only 0.010, the cross-entropy loss is large (-ln 0.010 ≈ 4.6), indicating that the model performs poorly on that prediction.
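For a two-class problem, the loss for a single prediction can be sketched as below. The function name and the clipping constant `eps` are assumptions added for this illustration; the clipping only guards against taking the log of 0.

```python
import math

def binary_cross_entropy(y_true: int, p: float, eps: float = 1e-12) -> float:
    """Cross-entropy for one example: -[y*ln(p) + (1-y)*ln(1-p)].

    y_true is 0 or 1; p is the predicted probability of class 1.
    """
    p = min(max(p, eps), 1.0 - eps)  # keep p away from exactly 0 or 1
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1.0 - p))

print(binary_cross_entropy(1, 0.010))  # about 4.6: confident and wrong
print(binary_cross_entropy(1, 0.990))  # about 0.01: confident and right
```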

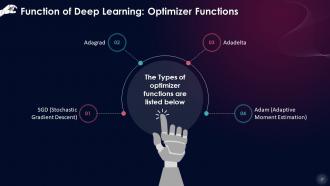

Slide 17

This slide lists optimizer functions as a part of Deep Learning. These include stochastic gradient descent, Adagrad, Adadelta, and Adam (adaptive moment estimation).

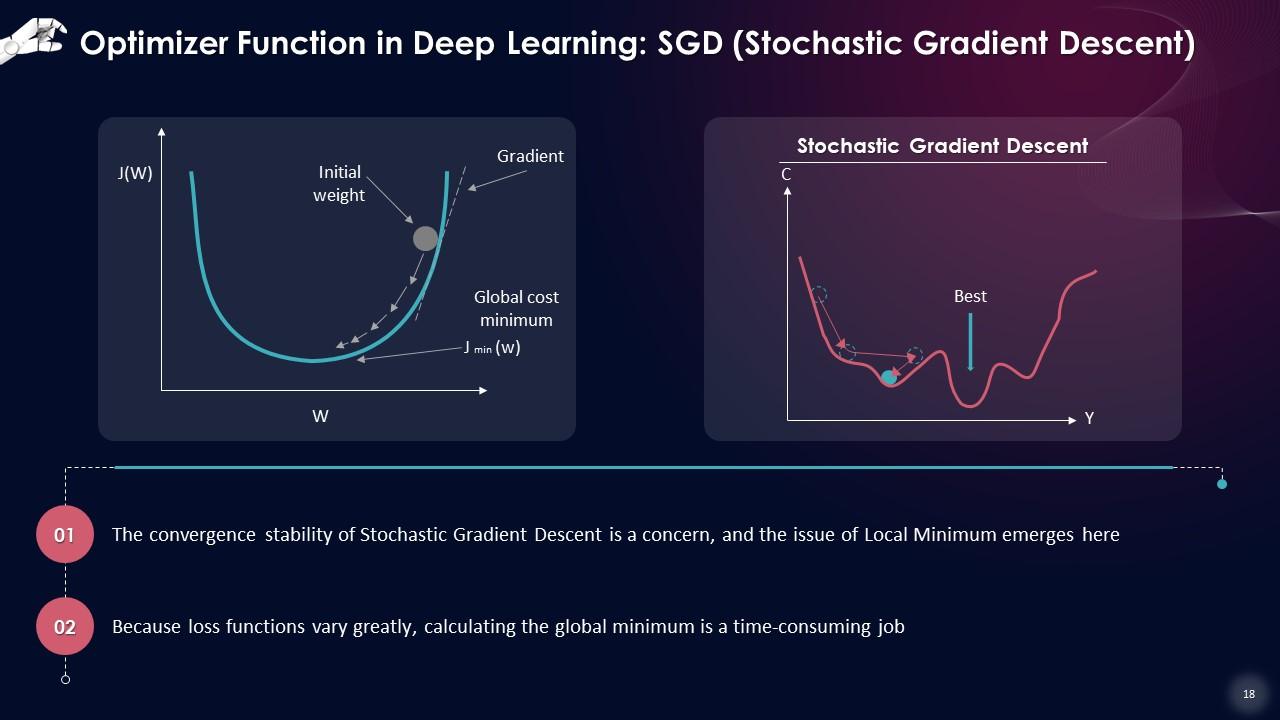

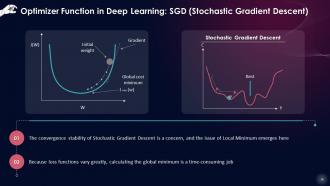

Slide 18

This slide states that the convergence stability of Stochastic Gradient Descent is a concern because each update is based on a small sample of the data, and the optimizer can get stuck in a local minimum. When the loss surface varies greatly, finding the global minimum is time-consuming.
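A minimal sketch of stochastic gradient descent follows, assuming a toy problem that is not from the deck: fitting the slope w in y = w * x with a squared-error loss, updating after every single sample. The function name, data, and hyperparameters are illustrative.

```python
import random

def sgd_fit_slope(xs, ys, lr=0.05, epochs=50, seed=0):
    """Fit y = w * x by updating w after each individual sample."""
    rng = random.Random(seed)      # fixed seed so the run is repeatable
    w = 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)         # the "stochastic" part: random sample order
        for i in order:
            # Gradient of (w * x - y)^2 with respect to w, for one sample.
            grad = 2.0 * (w * xs[i] - ys[i]) * xs[i]
            w -= lr * grad
    return w

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x for x in xs]         # the true slope is 2
print(sgd_fit_slope(xs, ys))
```

This toy loss has a single minimum, so the run settles on the true slope. On the uneven loss surfaces the slide refers to, the same per-sample updates can oscillate or settle in a local minimum, and a learning rate that is too large can make each step overshoot.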

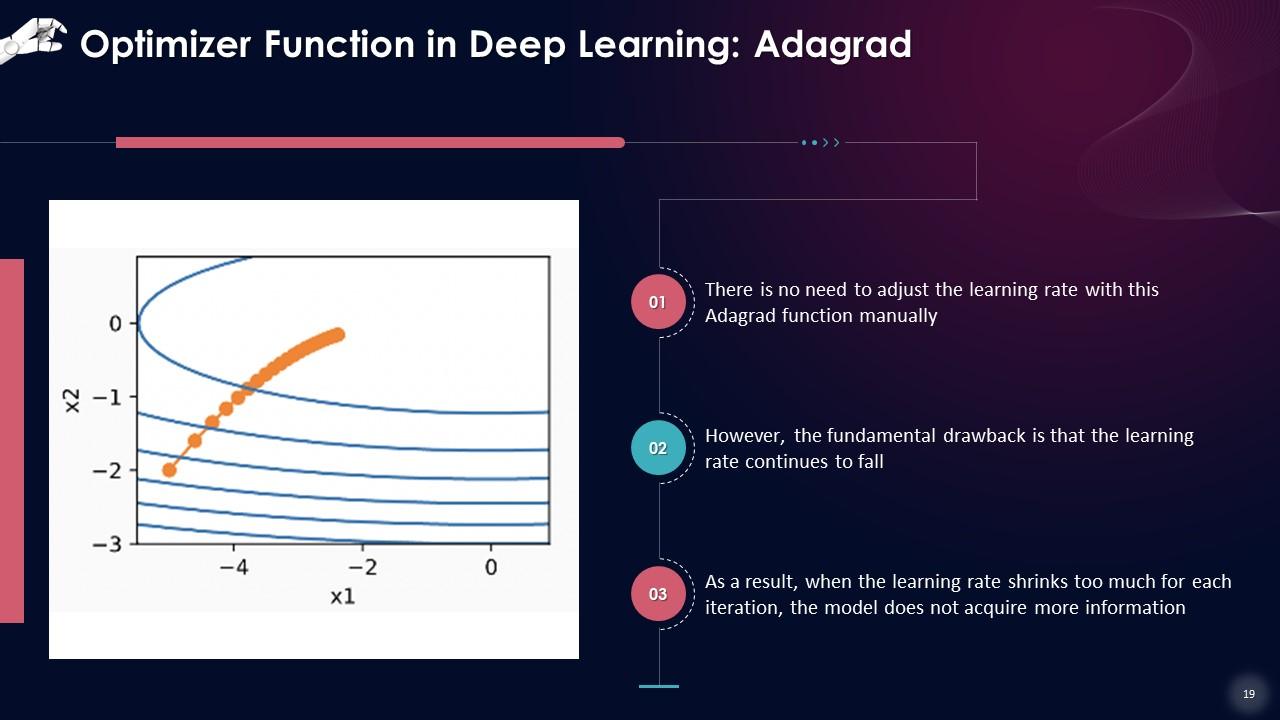

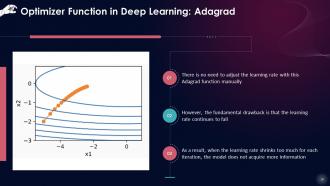

Slide 19

This slide states that with the Adagrad function, there is no need to adjust the learning rate manually. However, the fundamental drawback is that the effective learning rate keeps falling. As a result, when the learning rate shrinks too much over the iterations, the model stops learning.
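The falling learning rate can be observed in a small sketch of the usual Adagrad update, applied here to a made-up one-parameter loss (w - 3)^2. The function name, starting point, and hyperparameters are assumptions for illustration.

```python
import math

def adagrad_minimize(grad_fn, w0, lr=0.5, steps=100, eps=1e-8):
    """Adagrad for one parameter: divide the base rate by the root of the
    accumulated squared gradients, so the effective rate can only shrink."""
    w = w0
    sum_sq_grad = 0.0
    effective_rates = []
    for _ in range(steps):
        g = grad_fn(w)
        sum_sq_grad += g * g                       # never decreases
        rate = lr / (math.sqrt(sum_sq_grad) + eps)
        w -= rate * g
        effective_rates.append(rate)
    return w, effective_rates

# Gradient of the illustrative loss (w - 3)^2 is 2 * (w - 3).
w, rates = adagrad_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w)
print(rates[0], rates[-1])  # the last effective rate is smaller than the first
```

Because the accumulated sum only grows, the effective rate never recovers, which is the drawback described above.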

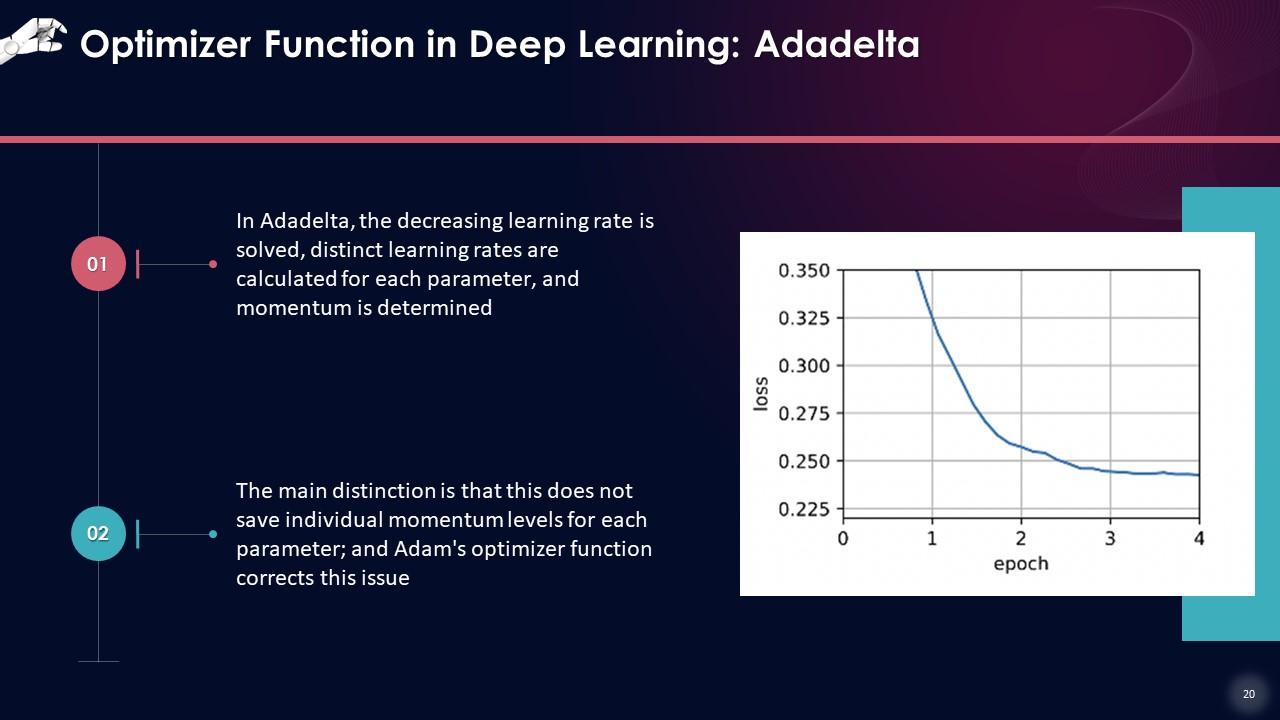

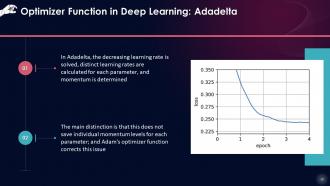

Slide 20

This slide states that Adadelta solves the problem of the decreasing learning rate, calculates a distinct learning rate for each parameter, and determines momentum. The main limitation is that it does not save individual momentum levels for each parameter; the Adam optimizer function corrects this issue.
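One common formulation of Adadelta replaces Adagrad's ever-growing sum with decaying averages, so the step size no longer shrinks toward zero. The sketch below assumes that formulation and a made-up one-parameter loss (w - 3)^2; the function name and the `rho` and `eps` values are illustrative choices.

```python
import math

def adadelta_minimize(grad_fn, w0, rho=0.95, eps=1e-6, steps=100):
    """Adadelta for one parameter, with no hand-set learning rate."""
    w = w0
    avg_sq_grad = 0.0  # decaying average of squared gradients
    avg_sq_step = 0.0  # decaying average of squared updates
    for _ in range(steps):
        g = grad_fn(w)
        avg_sq_grad = rho * avg_sq_grad + (1.0 - rho) * g * g
        # The step is scaled by the ratio of the two running averages.
        step = -math.sqrt(avg_sq_step + eps) / math.sqrt(avg_sq_grad + eps) * g
        avg_sq_step = rho * avg_sq_step + (1.0 - rho) * step * step
        w += step
    return w

# Gradient of the illustrative loss (w - 3)^2 is 2 * (w - 3).
print(adadelta_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0))
```

In this sketch the first updates are small because the running average of updates starts at zero, so 100 steps move w only part of the way toward 3. The averages forget old gradients, unlike Adagrad's accumulated sum.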

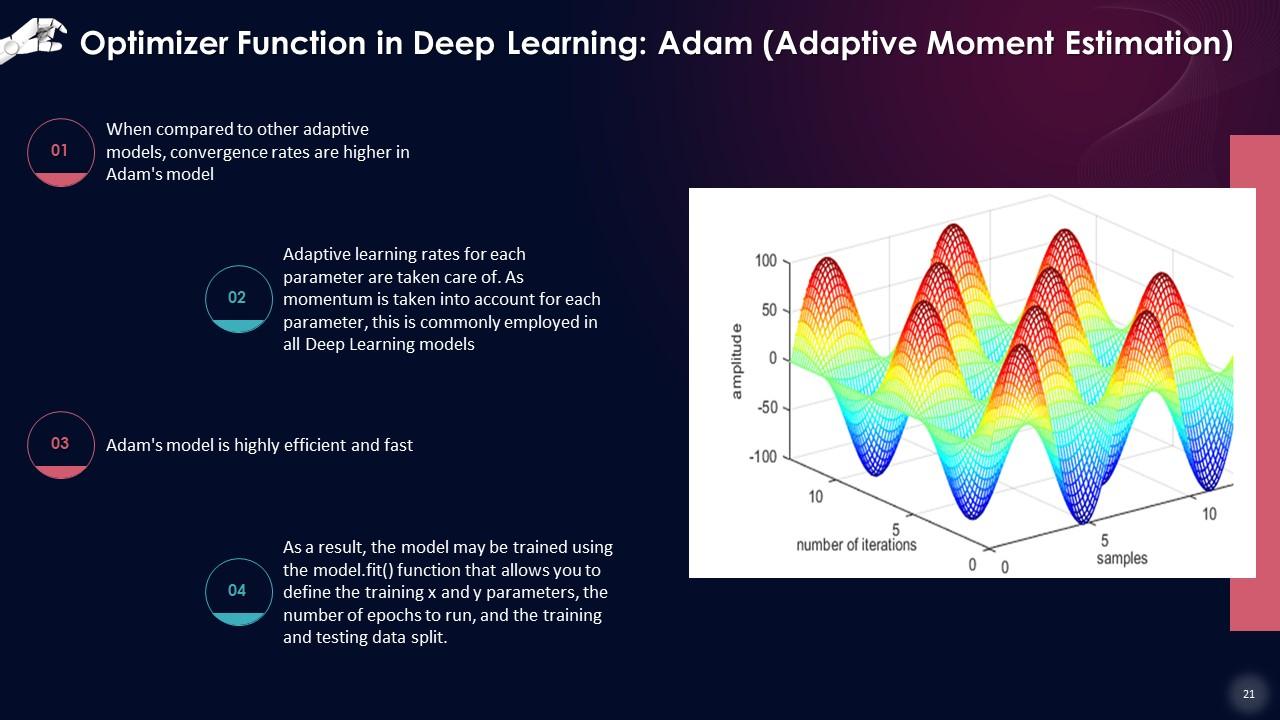

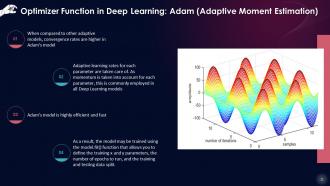

Slide 21

This slide describes that, compared to other adaptive optimizers, Adam generally converges faster. It maintains an adaptive learning rate for each parameter. Because momentum is also taken into account for each parameter, Adam is commonly employed across Deep Learning models. It is highly efficient and fast.
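The combination of momentum and an adaptive rate can be sketched with the standard Adam update on a made-up one-parameter loss (w - 3)^2. The function name and the step count are assumptions; the `beta1`, `beta2`, and `eps` values are the commonly used defaults.

```python
import math

def adam_minimize(grad_fn, w0, lr=0.1, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=300):
    """Adam for one parameter: momentum (m) plus an adaptive rate (v)."""
    w = w0
    m = 0.0  # running average of gradients (momentum)
    v = 0.0  # running average of squared gradients (adaptive scaling)
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta1 * m + (1.0 - beta1) * g
        v = beta2 * v + (1.0 - beta2) * g * g
        m_hat = m / (1.0 - beta1 ** t)  # bias correction for the zero start
        v_hat = v / (1.0 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

# Gradient of the illustrative loss (w - 3)^2 is 2 * (w - 3).
print(adam_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0))
```

In a real network each weight keeps its own `m` and `v`, which is what gives every parameter its own momentum and learning rate.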

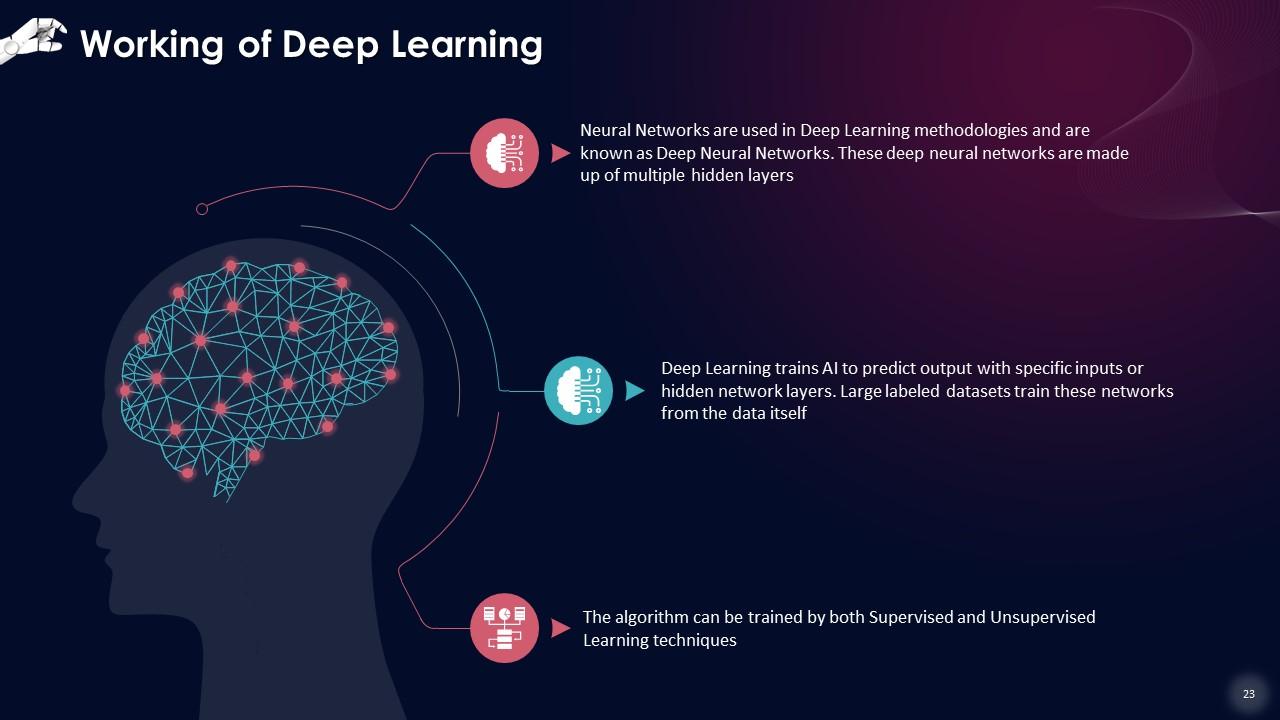

Slide 23

This slide explains the working of Deep Learning. Deep Neural Networks are made up of multiple hidden layers. Deep Learning trains a model to predict outputs from given inputs by passing them through the hidden network layers. These networks are trained on large labeled datasets and learn features directly from the data.

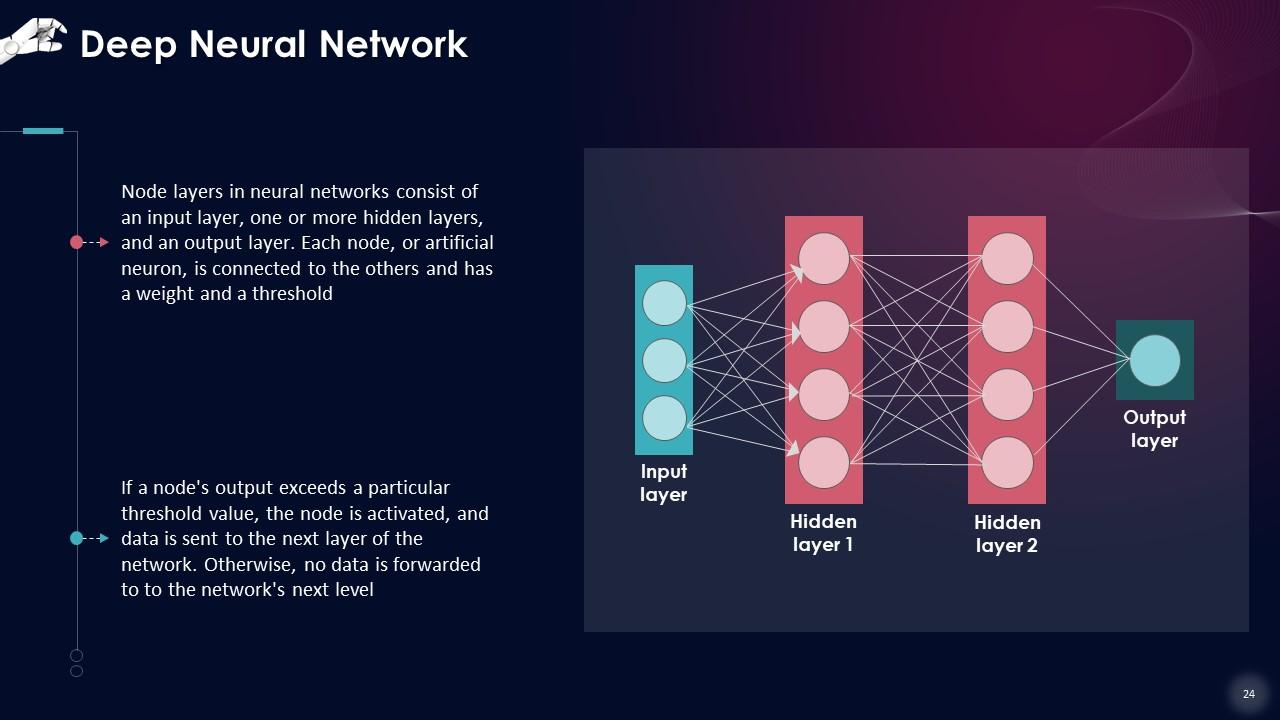

Slide 24

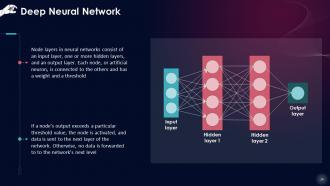

This slide discusses the structure of a deep neural network, which consists of three types of node layers: an input layer, followed by one or more hidden layers, and finally an output layer.
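The three layer types can be sketched as a tiny forward pass. Everything specific here is an assumption made for illustration: the layer sizes (2 inputs, 3 hidden nodes, 1 output), the hand-picked weights and biases, and the choice of ReLU and sigmoid activations.

```python
import math

def relu(z: float) -> float:
    return max(0.0, z)

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One layer: each node computes activation(weights . inputs + bias)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hidden layer: 3 nodes, each with 2 weights (one per input) and a bias.
HIDDEN_W = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
HIDDEN_B = [0.0, 0.1, -0.1]
# Output layer: 1 node with 3 weights (one per hidden node) and a bias.
OUTPUT_W = [[0.7, -0.5, 0.2]]
OUTPUT_B = [0.05]

def forward(x):
    """Input layer -> hidden layer -> output layer."""
    hidden = dense(x, HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)

print(forward([1.0, 2.0]))  # a single value between 0 and 1
```

Adding more hidden layers means chaining more `dense` calls between the input and the output.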

Slide 25

This slide talks about the Deep Learning technique implemented using numerous neural networks or hidden layers that help understand the images thoroughly to make correct predictions.

Instructor’s Notes: This method works well with huge, complex datasets. Deep Learning struggles with new data if the training data is insufficient or incomplete.

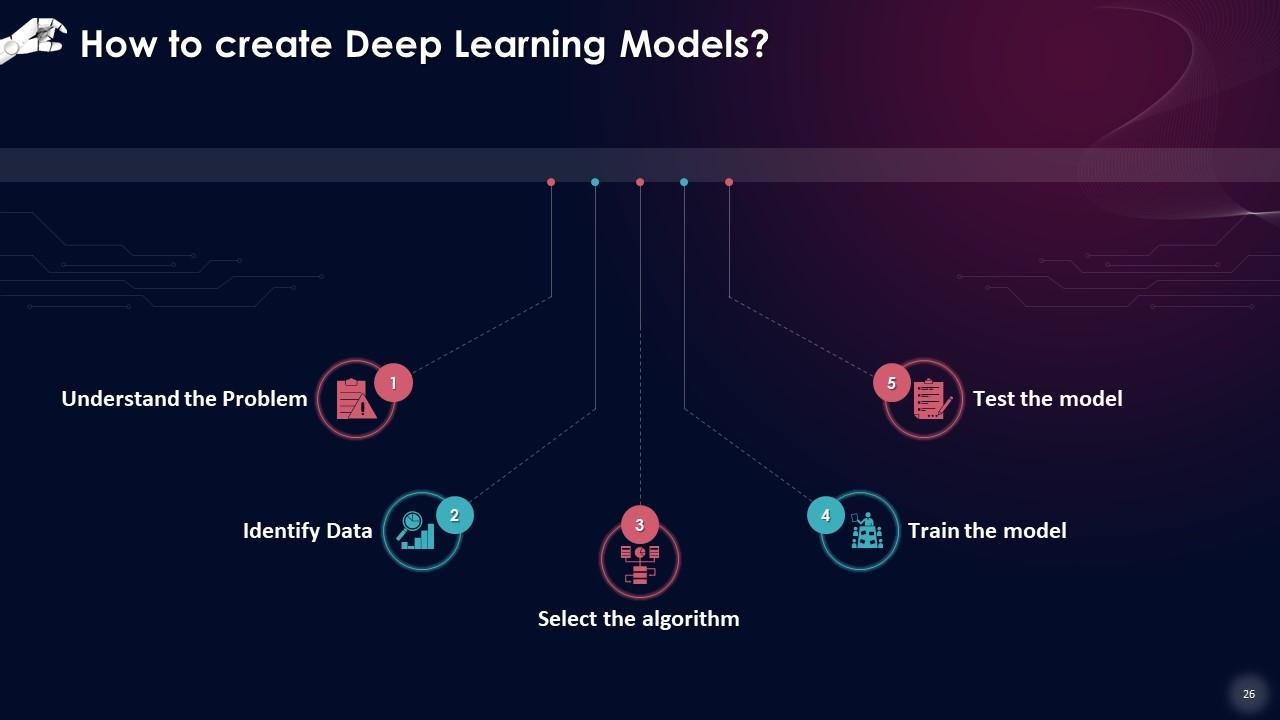

Slide 26

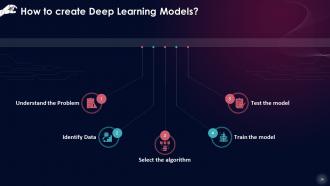

This slide illustrates the step-by-step process of creating deep learning models. The steps include understanding the problem, identifying data, selecting the algorithm, training the model, and testing the model.

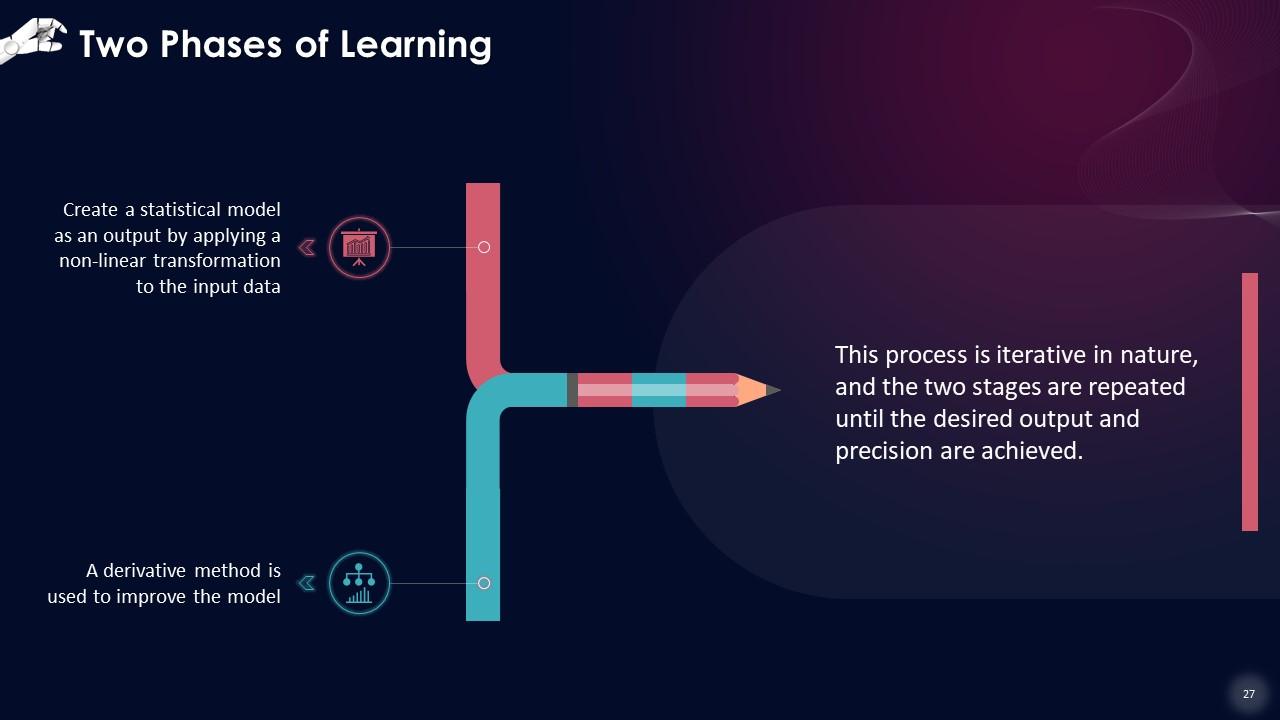

Slide 27

This slide depicts the two phases of operations in deep learning: creating a statistical model as an output by applying a non-linear transformation to the input data, and improving the model using a derivative-based method.
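Both phases can be seen in a minimal training loop for a single neuron. The data, the sigmoid non-linearity, the cross-entropy loss, and the hyperparameters are all assumptions chosen to keep the sketch short.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def forward(w: float, b: float, x: float) -> float:
    """Phase 1: non-linear transformation of the input into a prediction."""
    return sigmoid(w * x + b)

def mean_loss(w, b, xs, ys):
    """Average cross-entropy between predictions and 0/1 labels."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = forward(w, b, x)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(xs)

def train(xs, ys, lr=0.5, epochs=200):
    """Phase 2: use derivatives of the loss to improve w and b."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        preds = [forward(w, b, x) for x in xs]
        # For sigmoid + cross-entropy, d(loss)/dz is (prediction - label).
        grad_w = sum((p - y) * x for p, x, y in zip(preds, xs, ys)) / n
        grad_b = sum(p - y for p, y in zip(preds, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]          # negative inputs are class 0, positive are class 1
w, b = train(xs, ys)
print(mean_loss(0.0, 0.0, xs, ys), mean_loss(w, b, xs, ys))  # loss before vs. after
print(forward(w, b, 2.0), forward(w, b, -2.0))
```

Each pass through the loop runs the forward transformation once and then applies one derivative-based correction, so the loss after training is lower than at the start.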

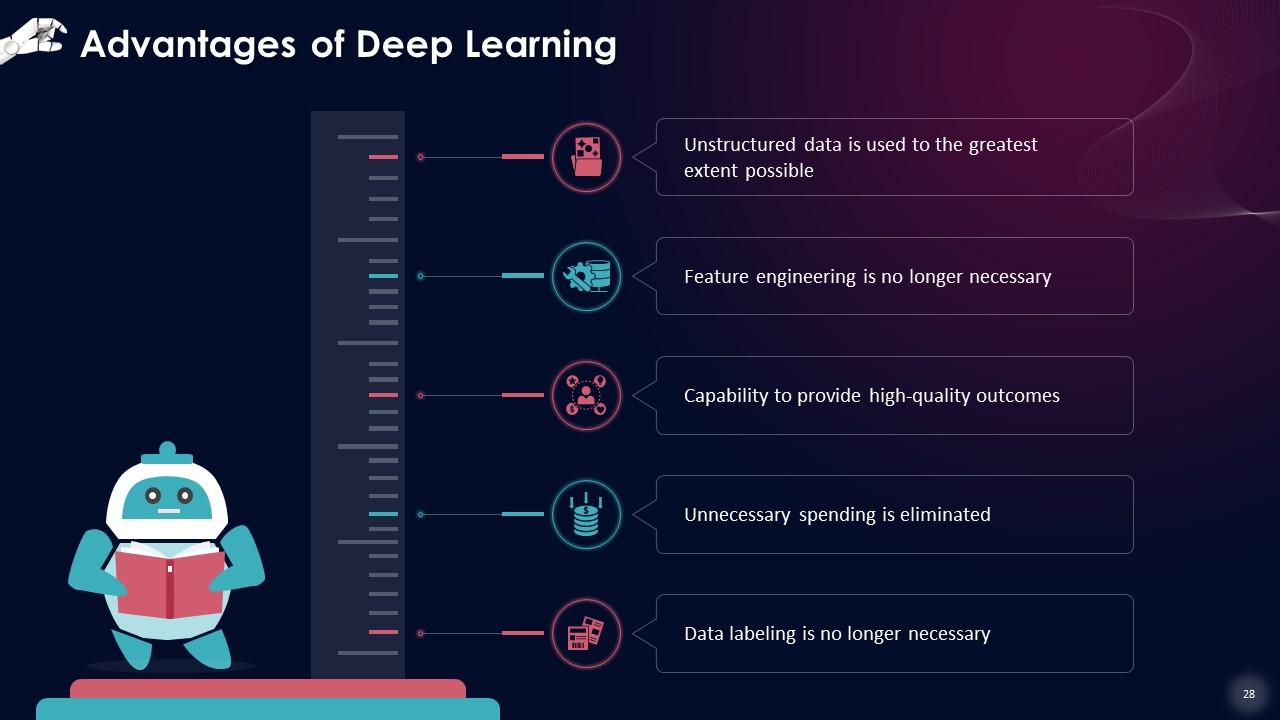

Slide 28

This slide lists advantages of Deep Learning: unstructured data is used to the greatest extent possible, feature engineering is no longer necessary, high-quality outcomes can be delivered, unnecessary spending is eliminated, and data labeling is no longer required.

Instructor Notes:

- Unstructured data is used to the greatest extent possible: You can train Deep Learning algorithms using varied data types while still obtaining insights that are relevant to the training goal. For example, you may use deep learning algorithms to find existing relationships between industry analyses, social media activity, and other factors to forecast a particular organization's future stock price.

- Feature engineering is no longer necessary: One of the major advantages of employing a Deep Learning technique is its ability to perform feature engineering independently. In this technique, an algorithm examines the data for correlated features and then combines them to encourage quicker learning without being expressly prompted. This ability allows data scientists to save a substantial amount of time.

- Capability to provide high-quality outcomes: A Deep Learning model, if properly trained, can perform thousands of regular, repetitive tasks in a fraction of the time it would take a human. Furthermore, the quality of the work does not fall unless the training data comprises raw data that does not correspond to the situation.

- Unnecessary spending is eliminated: Recalls are very costly, and in some sectors, a recall may cost a company millions of dollars in direct expenditures. Subjective defects that are difficult to train for, such as tiny product labeling problems, can be recognized using Deep Learning.

- Data labeling is no longer necessary: Data labeling can be a pricey and time-consuming task. With deep learning methods that learn from unlabeled data, the need for well-labeled data is reduced, since the algorithms can learn without explicit guidelines.

Slide 29

This slide lists applications of Deep Learning in the real world. These include detecting developmental delay in children, colorization of black & white images, adding sound to silent movies, pixel restoration, and Sequence Generation or Hallucination.

Slide 30

This slide states that one of the finest applications of Deep Learning is in the early detection and course correction of infant and child-related developmental disorders. MIT's Computer Science and AI Laboratory and Massachusetts General Hospital's Institute of Health Professions have created a computer system that can detect language and speech disorders even before kindergarten, when most cases typically emerge.

Slide 31

This slide describes image colorization, i.e., the technique of taking grayscale photos and producing colorized images that represent the semantic shades and tones of the input. Traditionally, this technique was carried out by hand and required human labor. Today, however, Deep Learning technology colors the image by using the objects in the photograph and their context.

Slide 32

This slide states that to identify acceptable sounds for a scene, a Deep Learning model learns to correlate video frames with a database of pre-recorded sounds. The model then uses these correlations to determine the best-matching sound for the video.

Slide 33

This slide discusses that in 2017, Google Brain researchers created a Deep Learning network to determine a person's face from very low-quality photos of faces. “Pixel Recursive Super Resolution” was the name given to this approach, and it considerably improves the resolution of photographs, highlighting essential features just enough for identification.

Slide 34

This slide showcases that sequence generation or hallucination works by creating unique footage after observing other video games, understanding how they function, and replicating them using Deep Learning techniques like recurrent neural networks. Deep Learning hallucinations can produce high-resolution visuals from low-resolution photos. This technique is also used to restore historical low-resolution photographs as high-resolution images.

Slide 35

This slide describes that the Deep Learning approach is incredibly efficient for toxicity testing for chemical structures; specialists used to take decades to establish the toxicity of a particular structure, but with a deep learning model, toxicity can be determined quickly (could be hours or days, depending on complexity).

Slide 36

This slide showcases that a cancer detection Deep Learning model contains 6,000 parameters that might help estimate a patient's survival. Deep learning models are efficient and effective for breast cancer detection. The Deep Learning CNN model can now identify and categorize mitosis in patients. Deep neural networks aid in the study of the cell life cycle.

Slide 37

This slide states that based on the dataset used to train the model, Deep Learning algorithms can forecast buy and sell calls for traders. This is beneficial for short-term trading and long-term investments based on the available attributes.

Slide 38

This slide describes that Deep Learning algorithms classify consumers based on prior purchases and browsing behavior and offer relevant and tailored adverts in real time. We can see this in action: if you search for a particular product on a search engine, you will also be shown relevant content from allied categories in your news feed.

Slide 39

This slide showcases that Deep Learning offers a promising answer to the problem of fraud detection by allowing institutions to make the most of both historical customer data and real-time transaction details gathered at the moment of the transaction. Deep Learning models may also be used to determine which products and marketplaces are more vulnerable to fraud, so that extra caution can be applied in such circumstances.

Slide 40

This slide states that seismologists attempt to forecast earthquakes, but the task is far too complicated. One incorrect prediction costs both the people and the government a lot of money. There are two waves in an earthquake: the p-wave (travels quickly but does less damage) and the s-wave (travels slowly but does more damage). It isn't easy to make judgments days in advance, but using deep learning techniques, we can forecast the outcome of each wave based on prior data and experience. This may take hours, but it is rapid enough to serve as a useful warning that can save lives and prevent damage.

Slide 41

This slide gives an overview of Deep Fakes, which refers to modified digital material, such as photos or videos, in which the image or video of a person is replaced with the likeness of another person. Deep Fakes are one of the most severe concerns confronting modern civilization.

Instructor Notes:

In 2018, a spoof clip of Barack Obama was made, using phrases he never spoke. Furthermore, Deep Fakes were used during the 2020 US election to distort footage of Joe Biden so that it showed him with his tongue out. These detrimental applications of deepfakes can significantly influence society and result in the spreading of false information, particularly on social media.

Slide 42

This slide states some of the disadvantages of Deep Learning: It needs a vast quantity of data to outperform other decision-making strategies. There is no standard theory to guide the choice of deep learning tools, since that choice requires an understanding of topology, training technique, and other characteristics. As a result, it is harder for less experienced practitioners to adopt.

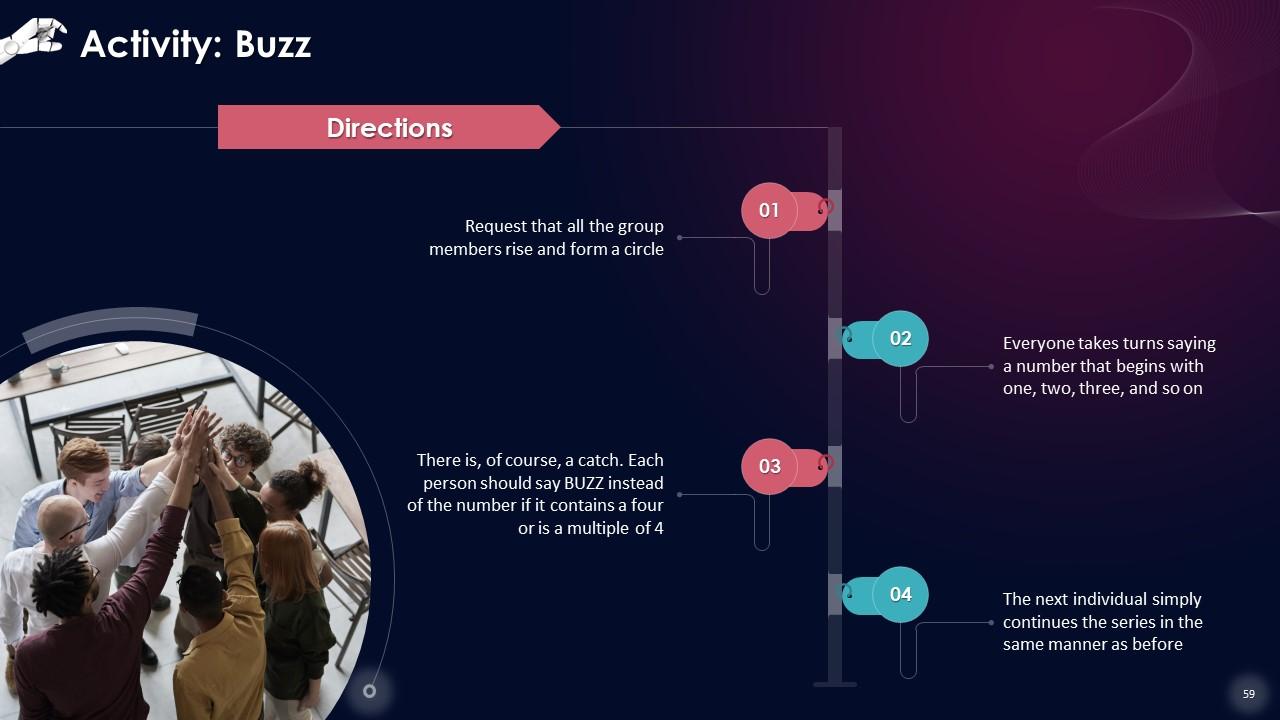

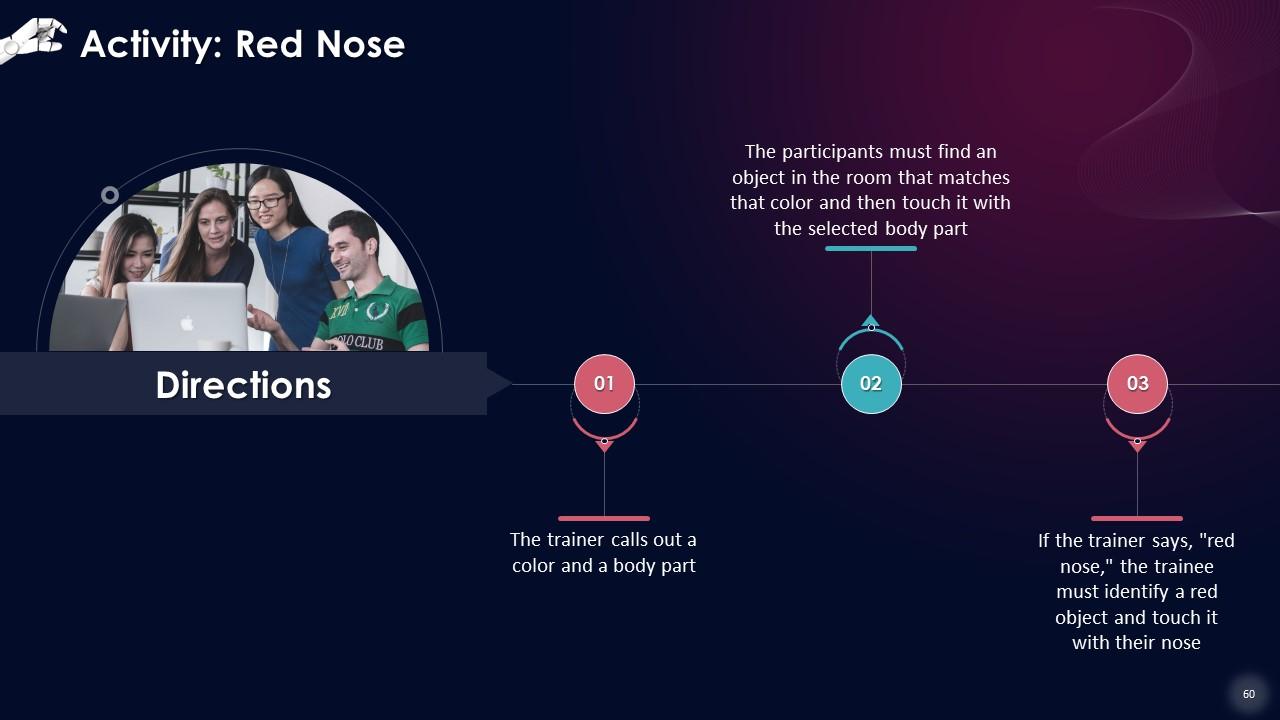

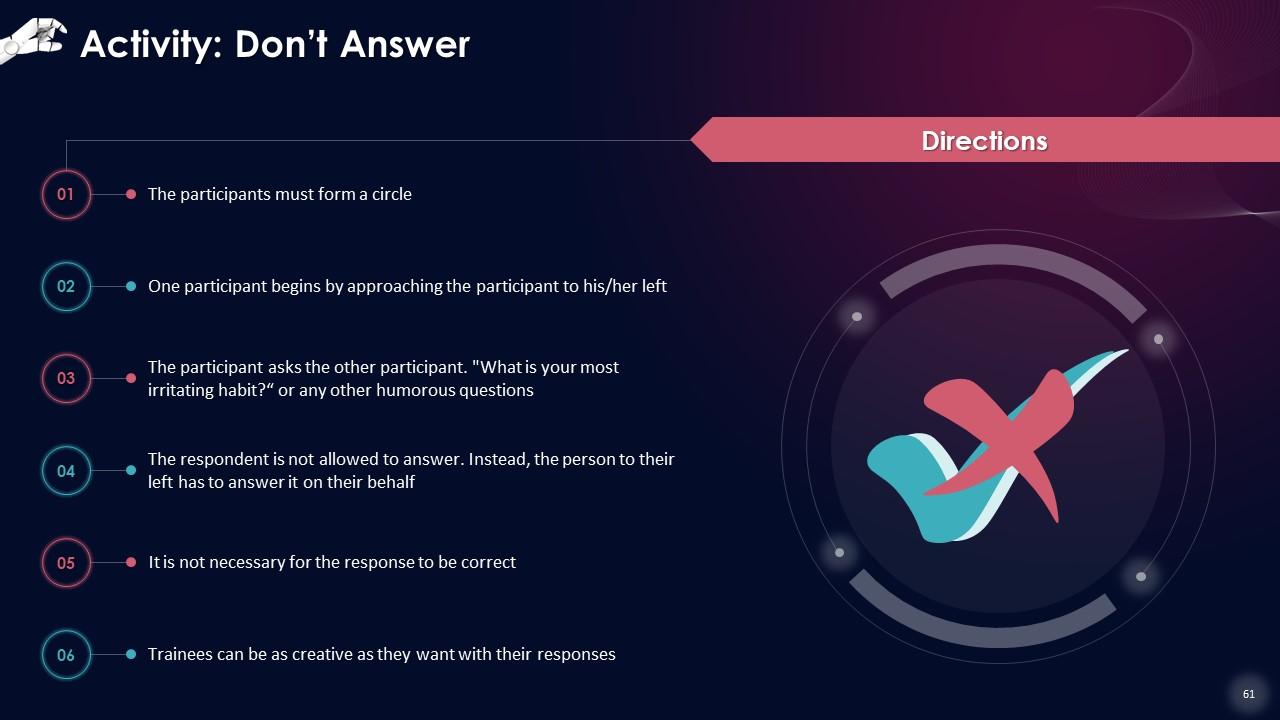

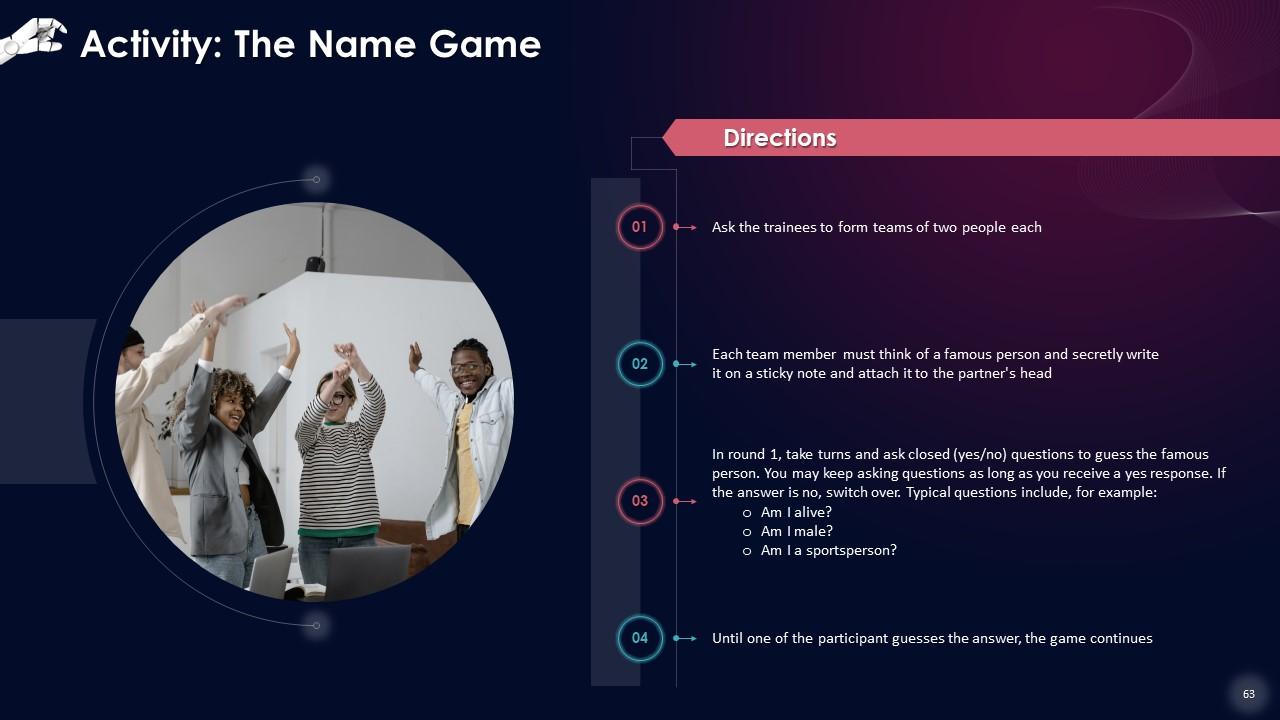

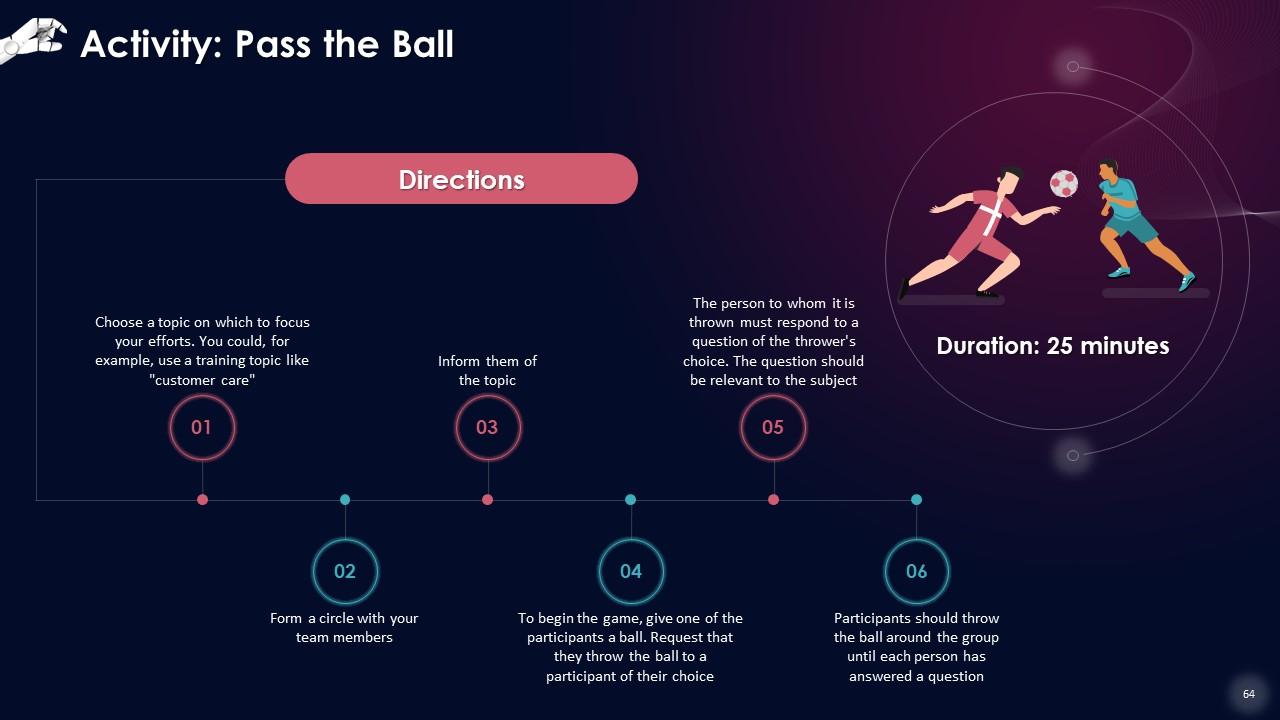

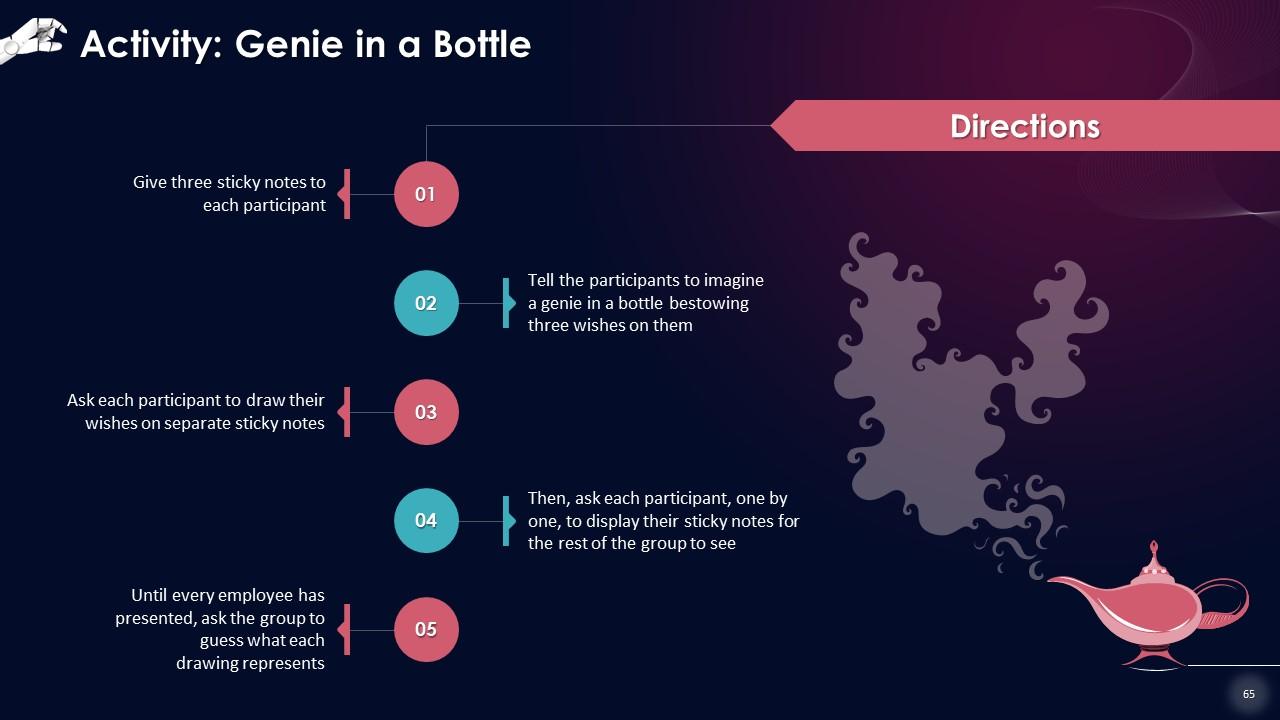

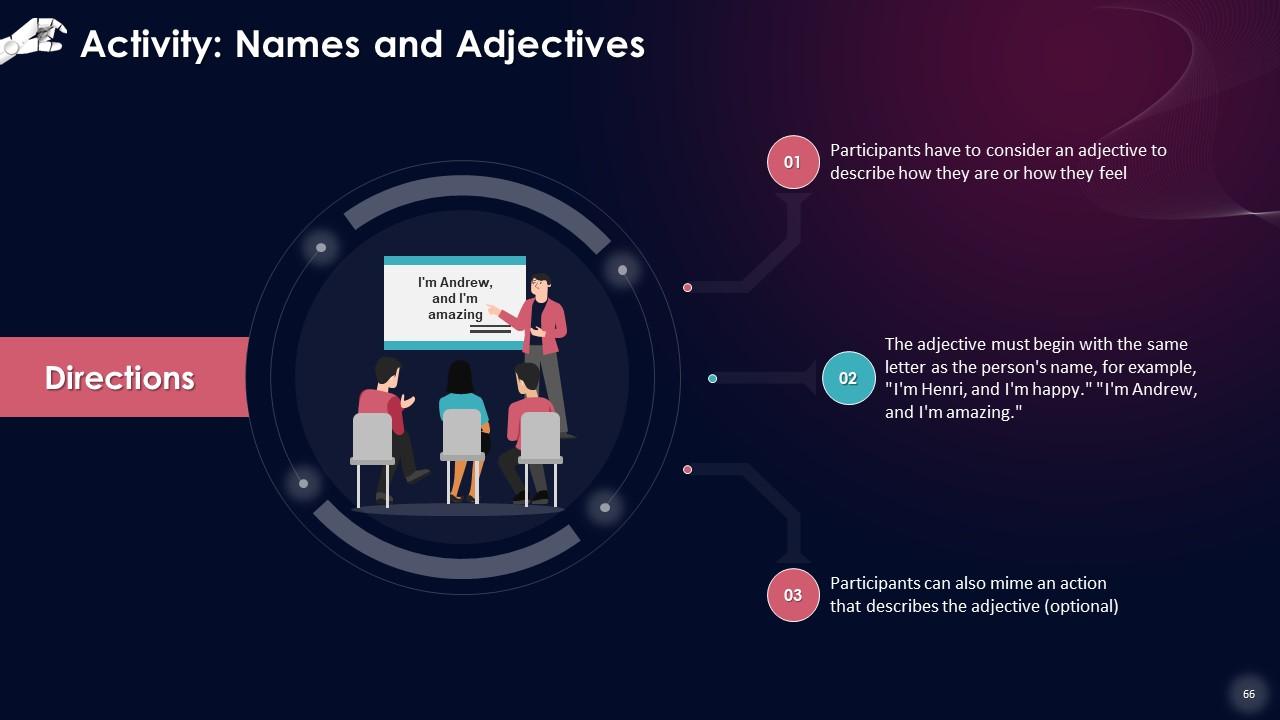

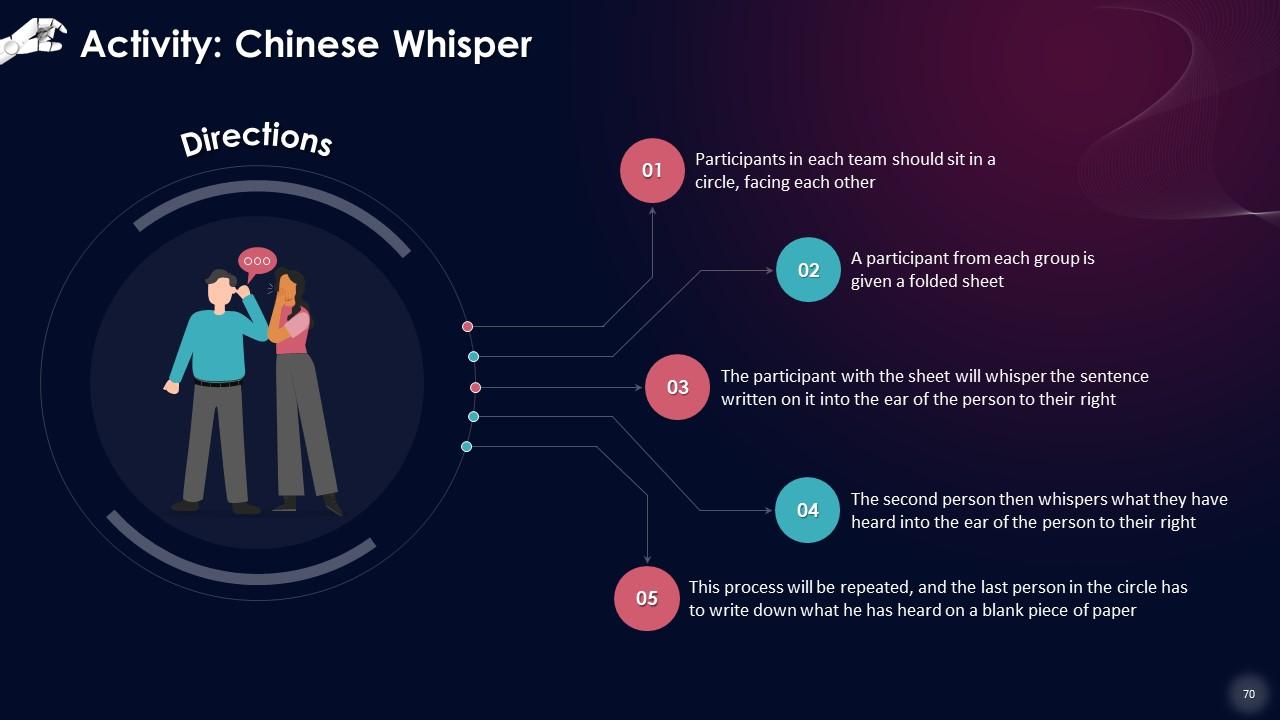

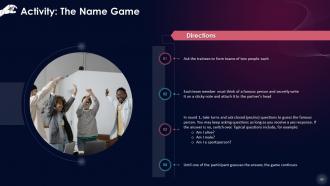

Slides 58 to 73

These slides contain energizer activities to engage the audience of the training session.

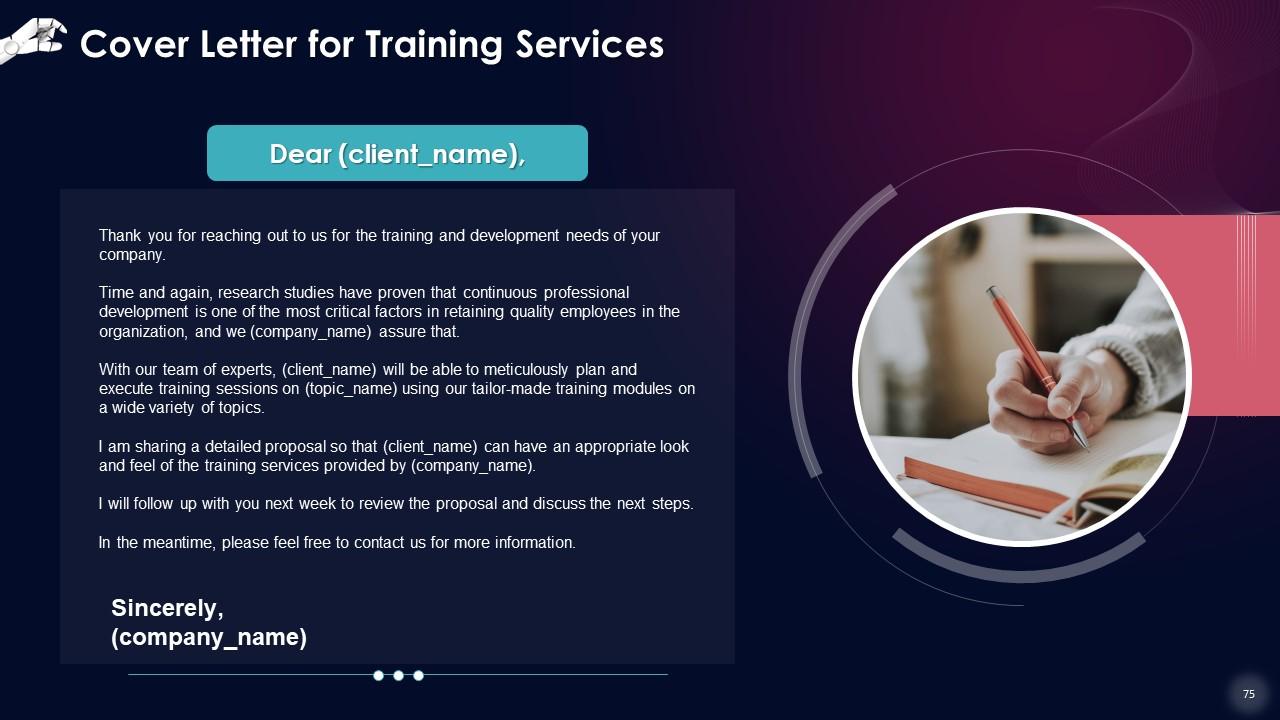

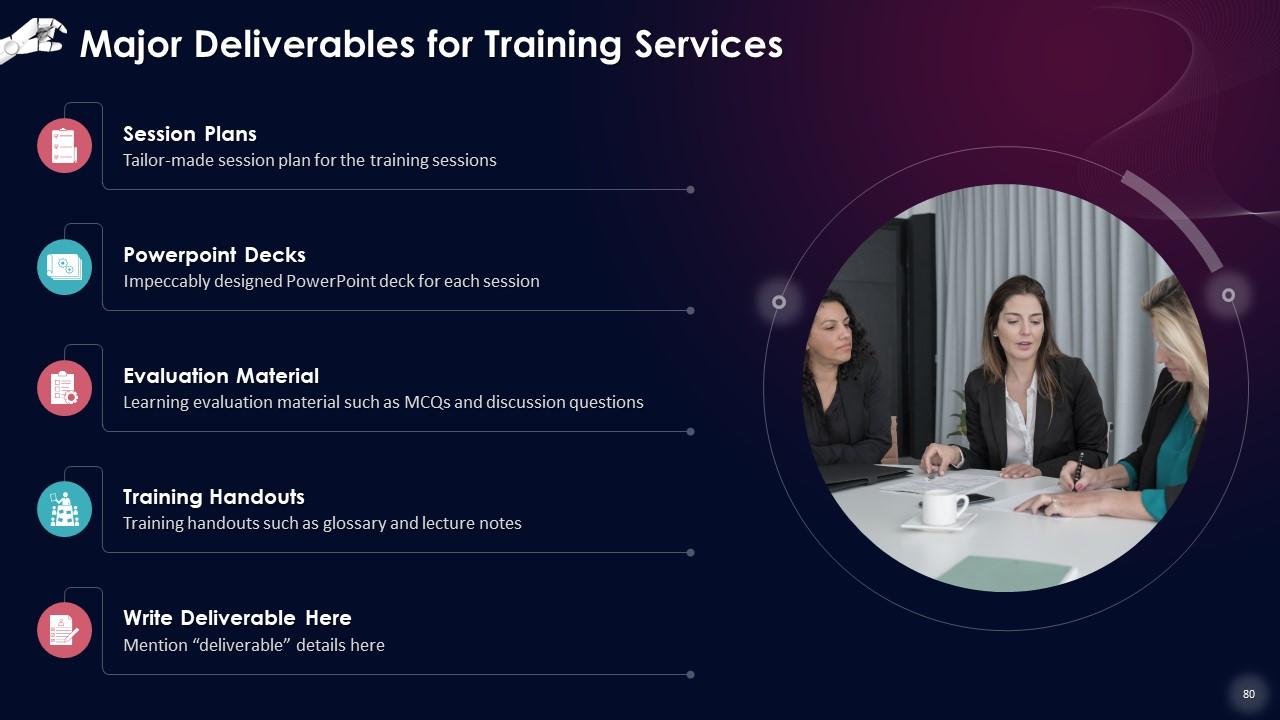

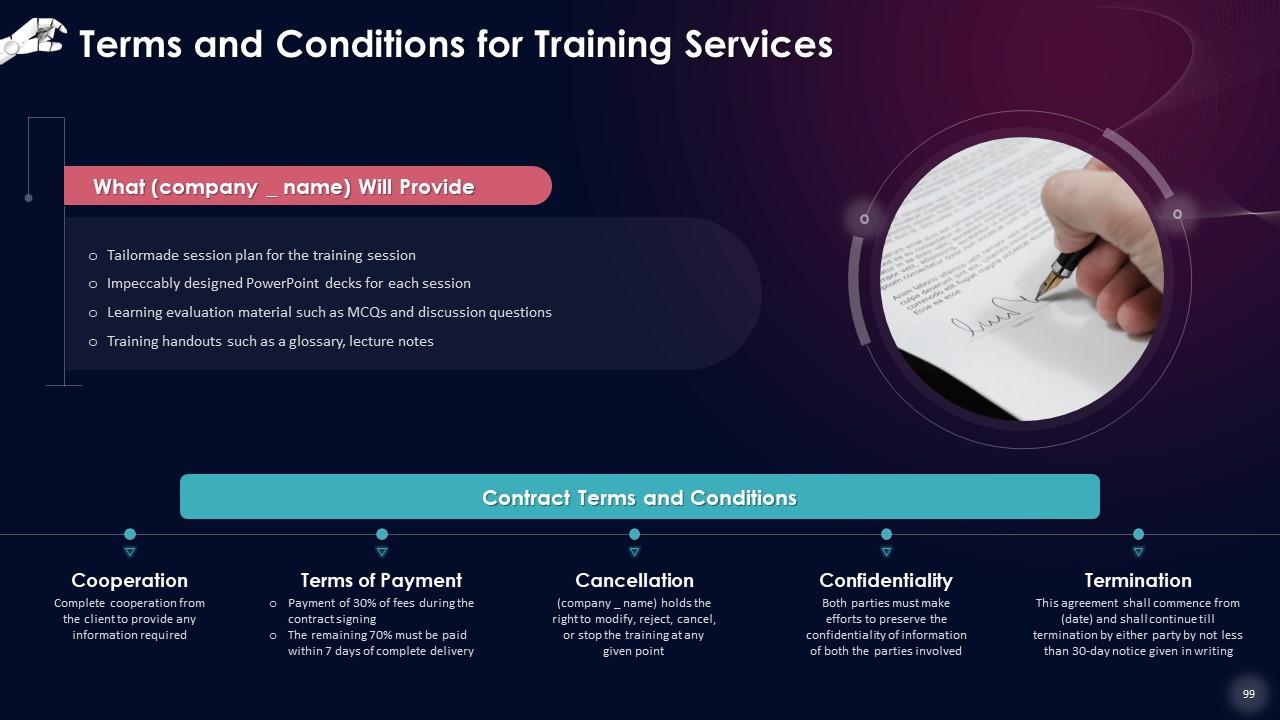

Slides 74 to 101

These slides contain a training proposal covering what the company providing corporate training can accomplish for the client.

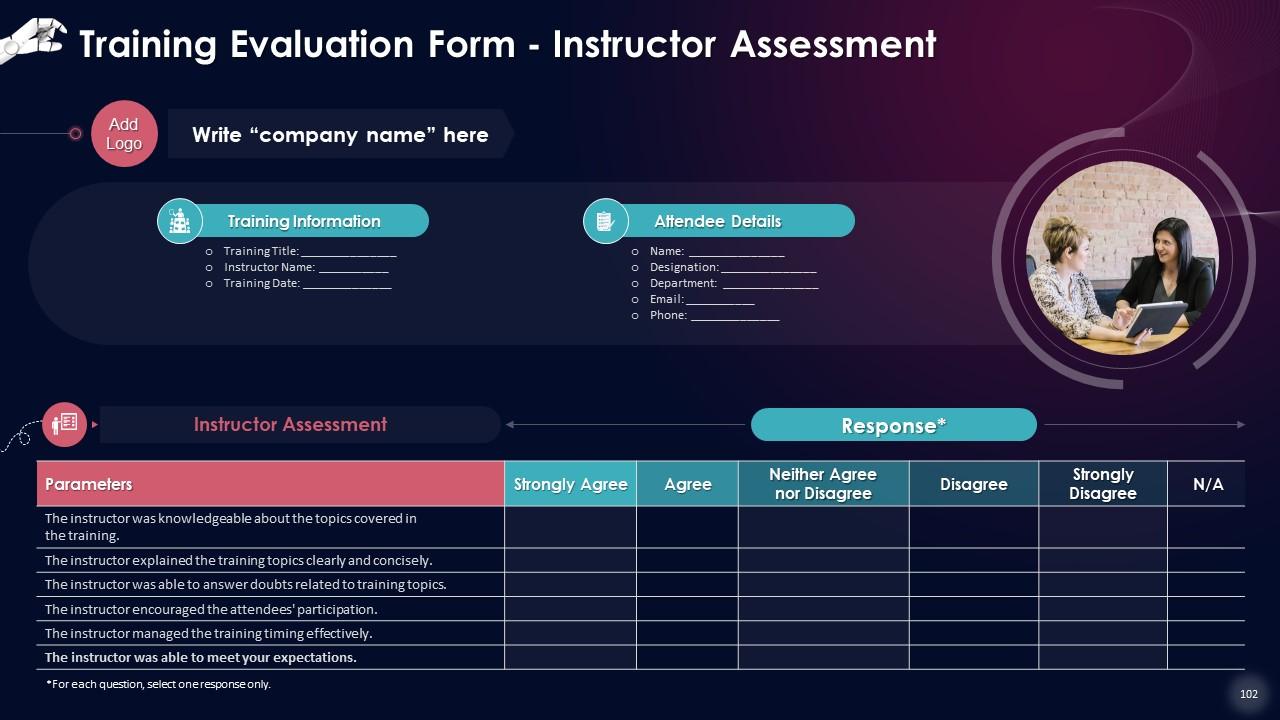

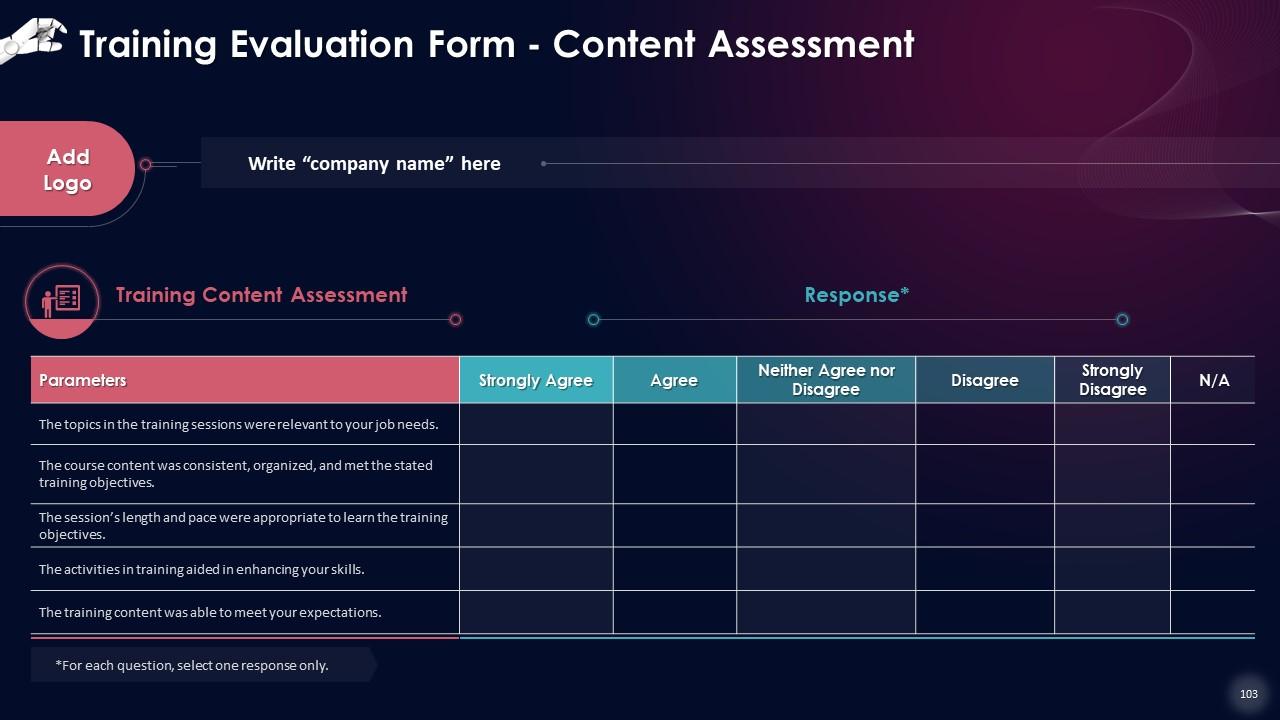

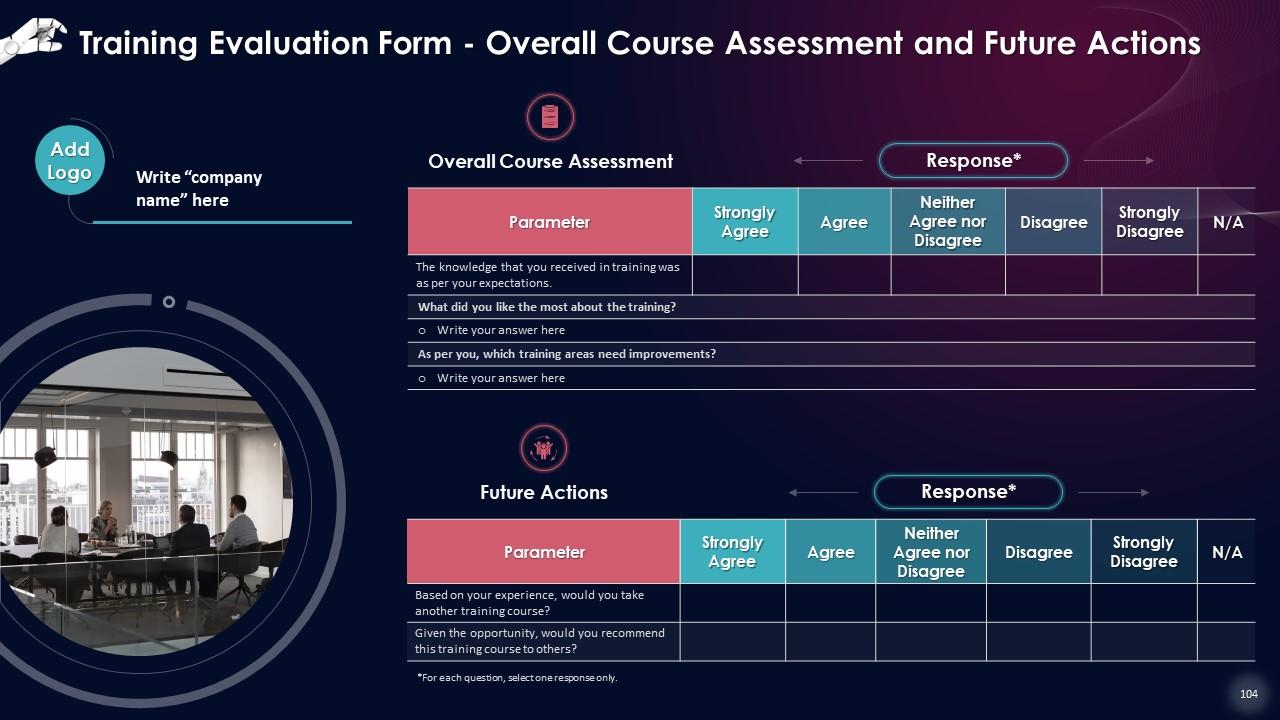

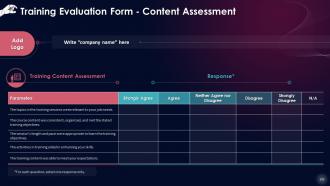

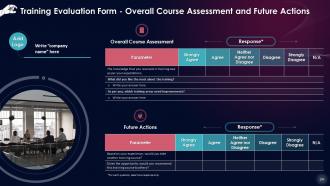

Slides 102 to 104

These slides include a training evaluation form for instructor, content and course assessment.

Deep Learning Mastering The Fundamentals Training Ppt with all 109 slides:

Use our Deep Learning Mastering The Fundamentals Training Ppt to save valuable time. The slides are ready-made to fit into any presentation structure.
